Federal Aviation Administration

Version 1.0

November 6, 2023

UTM Field Test (UFT)

Final Report

Federal Aviation Administration

Advanced Concepts Branch, ANG-C2

NextGen Technology Development & Prototyping Division

800 Independence Avenue SW

Washington, DC 20591

UTM Field Test (UFT) Version 1.0

Final Report November 6, 2023


Executive Summary

The Unmanned Aircraft Systems (UAS) Traffic Management (UTM) Field Test (UFT) validated and field tested the next set of industry and Federal Aviation Administration (FAA) capabilities needed to support UTM. UFT activities moved UTM closer to operational implementation of UTM services and continued the collaboration between the FAA, the National Aeronautics and Space Administration (NASA), and industry to mature UTM.

The FAA, NASA, and industry partners worked to demonstrate important capabilities during UFT,

including:

• Capabilities proposed by standards including strategic coordination.

• Enhancements to UTM functionalities (e.g., data correlation).

• Updated security management to secure UTM data exchanges.

• Concept elements such as authorized historical data queries.

UFT used several components in the UTM ecosystem including UAS Service Suppliers (USSs),

FAA’s Flight Information Management System (FIMS), Discovery and Synchronization Service

(DSS), and authorization servers. The partners providing these capabilities for UFT included:

• Test Sites: New York UAS Test Site (NYUASTS) and Mid-Atlantic Aviation Partnership

(MAAP) with the Lone Star UAS Center of Excellence and Innovation (LSUASC).

• Industry USS Partners: ANRA, AX Enterprize, CAL Analytics, Collins, OneSky, Wing.

UFT started in July of 2022 with test activities completed in April of 2023. The testing evaluated

various elements of the ASTM USS Interoperability Standard, including strategic conflict

detection, conformance monitoring, constraint management and processing, and priority

operations. UFT provided useful insights to inform the FAA and industry as UTM transitions from

research and development into implementation of UTM services, including the following.

• The increase in relevant operational information provided to operators improved situational awareness and operators’ ability to plan or re-plan their flights.

• The automated test harness concept proved effective in verifying USS functionality.

• UFT developed and tested key ASTM standard elements for strategic deconfliction.

Further progress is needed to address implementation gaps of the ASTM standard, such as

availability arbitration and aggregated intent conformance monitoring.

• Industry should evaluate important governance issues, such as service quality, and ensure

agreement on the approach to meet the FAA requirements on safety, security, and

privacy. This supports maturation of elements in UTM such as Cooperative Operating

Practices (COPs) and authorization server implementation.

• UFT validated that the ASTM standard should support strategic deconfliction and

conformance monitoring among multiple USSs and operators. Further maturation of UTM

services requires evaluation through real-world operations.


Version History

Date        Revision          Version
11/6/2023   Initial Release   1.0


Table of Contents

Executive Summary
1 Introduction
1.1 Progression of UAS Traffic Management (UTM)
1.2 Scope
2 UTM Field Test (UFT) Overview
2.1 Key Elements of UFT
2.1.1 UFT Architecture
2.1.2 UAS Service Supplier (USS)
2.1.3 Flight Information Management System (FIMS)
2.1.4 Additional Industry Services
2.1.5 Message Security
2.2 UFT Partners and FAA Support
2.2.1 Test Site Partners
2.2.2 NextGen Integration and Evaluation Capability (NIEC) Lab
2.2.3 NASA
2.3 Operating Environments
2.3.1 New York UAS Test Site (NYUASTS)
2.3.2 Mid Atlantic Aviation Partnership (MAAP)
3 UFT Execution
3.1 Test Approach
3.1.1 Complexity
3.2 Data Collection Approach
3.2.1 Measures of Effectiveness (MOEs)
3.3 Entity Onboarding
3.4 Checkout
3.4.1 Industry Led Checkout
3.4.2 FAA Led Checkout
3.5 Shakedowns
3.5.1 Shakedown 1
3.5.2 Shakedown 2
3.6 Final Showcase
4 Demonstrated Capabilities
4.1 Operational Complexity
4.1.1 Analysis
4.2 Strategic Deconfliction
4.2.1 Analysis
4.2.2 Observations
4.3 Priority Operations
4.3.1 Analysis


4.3.2 Observations
4.4 Conformance Monitoring
4.4.1 Analysis
4.4.2 Observations
4.5 Constraint Management and Processing
4.5.1 Analysis
4.5.2 Observations
4.6 Data Correlation
4.6.1 Analysis
4.6.2 Observations
4.7 Historical Query
4.7.1 Analysis
4.7.2 Observations
4.8 Authorization Servers
4.8.1 Observations
4.9 Message Security
4.9.1 Analysis
4.9.2 Observations
4.10 Test Harness
4.10.1 Observations
5 Conclusion
5.1 Summary of Observations
5.2 Next Steps
Appendix A Scenarios
Appendix A.1 Shakedown Scenarios
Appendix B UFT Aircraft
Appendix C UAS Test Site’s Partner USS Summaries
Appendix C.1 ANRA
Appendix C.2 AX Enterprize
Appendix C.3 CAL Analytics
Appendix C.4 Collins Aerospace
Appendix C.5 OneSky
Appendix C.6 Wing
Appendix D Method for Calculating UAS Operational Density
Appendix E References
Appendix F Acronyms


List of Figures

Figure 1: UTM High-Level Architecture
Figure 2: UFT High-Level Architecture
Figure 3: NYUASTS Operating Areas
Figure 4: MAAP Operating Environments (MAAP shown on left, LSUASC shown on right)
Figure 5: UFT Test Approach Stages
Figure 6: Operations Flown During Final Showcase Activities
Figure 7: NYUASTS Operational Density
Figure 8: NYUASTS Density by State
Figure 9: MAAP Tempo/Density by Use Case
Figure 10: MAAP Density by State
Figure 11: Display of Strategically Deconflicted Operations
Figure 12: NYUASTS Replans by Cause and Stage
Figure 13: MAAP Operational Intent Replans
Figure 14: MAAP Operational Intent Replan Heatmap
Figure 15: Non-Coordinated Off-Nominal Volumes
Figure 16: USS Constraint Displays
Figure 17: FAA Data Correlation User Interfaces
Figure 18: AX Enterprize Remote ID App with Data Correlation
Figure 19: ANRA, Collins, and OneSky Data Correlation Displays
Figure 20: Data Correlation Metrics
Figure 21: Historical Query User Interface
Figure 22: Comparing the Timing of Correlation Queries with Encryption Both On and Off


List of Tables

Table 1: Test Site Partners
Table 2: UFT MOEs
Table 3: NYUASTS Showcase Scenarios
Table 4: MAAP Showcase Scenarios
Table 5: Demonstrated Capability to MOE Mapping
Table 6: Operational Complexity Metrics
Table 7: Strategic Deconfliction Metrics
Table 8: NYUASTS Attempts for Accepted Operation
Table 9: MAAP Attempts for Accepted Operation
Table 10: Strategic Deconfliction Observations
Table 11: Priority Operations Metrics
Table 12: Priority Ops Created vs. Conflicts Detected
Table 13: Replan Time Due to Higher Priority Operation
Table 14: Priority Ops Created vs. Conflicts Detected
Table 15: Replan Time Due to Higher Priority Operation
Table 16: Priority Operations Observations
Table 17: Conformance Monitoring Metrics
Table 18: NYUASTS Off-Nominal Operations
Table 19: NYUASTS Latency Sharing Off-Nominal Operations
Table 20: MAAP Off-Nominal Operations
Table 21: MAAP Latency Sharing Off-Nominal Operations
Table 22: Conformance Monitoring Observations
Table 23: Constraint Metrics
Table 24: NYUASTS Constraints Ingested
Table 25: NYUASTS Replan Time Due to Constraints
Table 26: NYUASTS Conflict Notification to Operator
Table 27: Constraints Created vs. Conflicts Caused
Table 28: Replan Time Due to Constraints
Table 29: MAAP Conflict Notification to Operator
Table 30: Constraint Management Observations
Table 31: Data Correlation Metrics
Table 32: Data Correlation Observations
Table 33: Historical Query Metrics
Table 34: Historical Query Observations
Table 35: Authorization Servers Observations
Table 36: Cybersecurity Metrics
Table 37: Message Security Observations
Table 38: Test Harness Observations
Table 39: Shakedown Scenarios
Table 40: UFT Aircraft
Table 41: Acronyms


1 Introduction

The Unmanned Aircraft Systems (UAS) Traffic Management (UTM) Field Test (UFT) is an

important activity for developing, expanding, validating, and field testing the next set of industry

and Federal Aviation Administration (FAA) capabilities needed to support UTM. UFT validation

and testing focuses on the technical feasibility of UTM capabilities and standards. While UFT

observations are used to inform policy development, they do not imply any policy decisions. In

winter and spring of 2023, the FAA, National Aeronautics and Space Administration (NASA), and

industry partners successfully completed UFT test and evaluation activities. This final report

documents and concludes the UFT project.

1.1 Progression of UAS Traffic Management (UTM)

UTM is the means by which the FAA will support UAS operations conducted in low-altitude airspace. UTM

utilizes industry’s ability to supply services under the FAA’s regulatory authority. It is a

community-based, cooperative traffic management system in which operators, UAS Service

Suppliers (USSs), and other participants are responsible for the coordination, execution, and

management of operations, with rules established by the FAA. Due to this cooperative nature, it

will be important for industry to define FAA-approved UTM Cooperative Operating Practices

(COPs) that address how operators manage their operations. Implementation of a safe and efficient

UTM service environment, including supporting infrastructure, is necessary to enable the

incorporation of routine Beyond Visual Line of Sight (BVLOS) operations in low-altitude airspace

(i.e., below 400 feet Above Ground Level [AGL]).

To support UTM implementation, collaborative research and test activities have been established.

This started with the UTM Research Transition Team (RTT) Technical Capability Level (TCL)

demonstration activities, which concluded in 2020. As technologies and capabilities were

transferred to the FAA, the UTM Pilot Program (UPP) was established to support deployment of

UTM capabilities within FAA systems and concluded in 2021 with the release of the Phase 2 Final

Report [1]. Continuing the collaboration between the FAA, NASA, and industry, UFT was

established to execute flight test activities, support industry in validating standards, and evaluate

the maturation of UTM services.

UTM development and implementation establishes requisite services, roles and responsibilities,

data exchange protocols, and performance requirements to enable the management of low-altitude

UAS operations. Figure 1 is the high-level UTM architecture.


Figure 1: UTM High-Level Architecture

1.2 Scope

This document provides a report of UFT test and evaluation results. The document uses the

following structure.

• Section 2 includes an overview of UFT, which details demonstrated capabilities, key

elements that were a focus of UFT activities, test sites/supporting participants, and test site

operating environments.

• Section 3 provides a summary of the execution of UFT activities including the test and data

collection approach, entity onboarding, checkouts, shakedown tests, and final showcase

activities.

• Section 4 provides details on each demonstrated capability, including relevant data, analysis, and observations.

• Section 5 provides a conclusion for UFT and discusses the next steps as they relate to UTM

implementation.

2 UTM Field Test (UFT) Overview

UFT was established as an important component in continuing the collaboration between FAA,

NASA, and industry as they mature UTM concepts, services, and standards. In July 2022, the FAA

selected two FAA UAS test sites to partner with for UFT development, testing, and evaluation

activities.


• Virginia Tech (VT), Mid-Atlantic Aviation Partnership (MAAP) with the Texas A&M University-Corpus Christi’s Lone Star UAS Center of Excellence and Innovation (LSUASC)

• New York UAS Test Site (NYUASTS)

In collaboration with NASA, the selected FAA UAS test sites, industry stakeholders, and public

safety stakeholders, the FAA conducted live flights to support industry in validating standards and

evaluating the maturation of UTM services. The UFT project aimed to:

• Advance capabilities proposed by standards including strategic coordination in complex

environments.

• Test enhancements to UTM functionalities (e.g., data correlation).

• Develop and test updated security management for information exchanges between the

FAA, industry, and authorized entities.

• Explore concept elements such as authorized historical data queries.

• Inform policy development to enable routine UTM operations.

Observations from UFT are used to inform and support many areas, including but not limited to informing policy development, maturing UTM standards and technologies, advancing UTM capabilities, and informing best practices for secure UTM information exchanges.

2.1 Key Elements of UFT

This section provides background information on key UTM elements that are a focus of UFT and

are discussed throughout this report.

2.1.1 UFT Architecture

Figure 2 is the high-level architecture that was used during UFT activities.


Figure 2: UFT High-Level Architecture

2.1.2 UAS Service Supplier (USS)

A USS is an entity that assists UAS operators with meeting UTM operational requirements that

enable safe and efficient use of airspace. A USS may provide three main functions:

• Act as a communications bridge between federated UTM actors to support operators’

abilities to meet the regulatory and operational requirements for UAS operations.

• Provide the operator with information about planned operations in and around a volume of

airspace so that operators can safely and efficiently conduct their mission.

• Archive the operator’s operations data in historical databases as appropriate for analytics, regulatory, and operator accountability purposes.

In general, these key functions allow for a network of USSs to provide cooperative management

of low-altitude operations without direct FAA involvement. The following terms are defined

within the context of USSs.

• USS Network: The amalgamation of USSs connected to each other, exchanging

information on behalf of subscribed operators. USSs share operational intent data, airspace

constraint information, and other relevant details across the network to ensure shared

situational awareness for UTM participants.


• Discovery and Synchronization Service (DSS): DSS is utilized by USSs to facilitate

automated data exchanges between one another within the USS network. This capability

allows USSs to identify one another and exchange relevant information when USSs are in

the same geographical service area.

The ASTM F3548-21 Standard Specification for UTM USS Interoperability [2] details the

requirements and Application Programming Interfaces (APIs) used to exchange data within the

USS network and with the DSS.
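The discovery role described above can be illustrated with a minimal sketch. It assumes a simplified model in which each USS registers the grid cells it serves and a requester asks which other USSs overlap its area of interest. The class names, the cell identifiers, and the example URLs are hypothetical and are not taken from ASTM F3548-21 or from any UFT implementation.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Subscription:
    """Hypothetical record of the grid cells a USS is interested in."""
    uss_id: str
    base_url: str
    cells: frozenset


class ToyDiscoveryService:
    """Illustrative stand-in for a DSS; not the ASTM F3548-21 API."""

    def __init__(self):
        # Maps a cell identifier to the subscriptions that cover it.
        self._by_cell = defaultdict(set)

    def register(self, sub):
        """Record that a USS serves each of its cells."""
        for cell in sub.cells:
            self._by_cell[cell].add(sub)

    def discover(self, cells, requester):
        """Return (uss_id, base_url) for other USSs overlapping the cells."""
        found = set()
        for cell in cells:
            found |= self._by_cell.get(cell, set())
        return sorted(
            (s.uss_id, s.base_url) for s in found if s.uss_id != requester
        )


dss = ToyDiscoveryService()
dss.register(Subscription("uss-a", "https://uss-a.example", frozenset({"c1", "c2"})))
dss.register(Subscription("uss-b", "https://uss-b.example", frozenset({"c2", "c3"})))
dss.register(Subscription("uss-c", "https://uss-c.example", frozenset({"c9"})))

# uss-a plans an operation in cell c2 and asks which peers it must contact.
peers = dss.discover({"c2"}, requester="uss-a")
print(peers)
```

In this sketch the discovery service only tells a USS whom to contact. The operational details themselves would then be exchanged directly between the USSs, which is consistent with the description of the DSS as a facilitator of USS-to-USS exchanges.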

2.1.3 Flight Information Management System (FIMS)

The Flight Information Management System (FIMS) is the FAA’s interface for data exchange

between FAA systems and UTM participants. FIMS enables the exchange of relevant data between

the FAA and the USS network. FIMS also provides a means for approved FAA stakeholders to

query for limited data on UTM operations.

The FAA FIMS prototype was implemented by the FAA Next Generation Air Transportation

System (NextGen) Integration and Evaluation Capability (NIEC) lab at William J. Hughes

Technical Center (WJHTC). The FIMS prototype consists of the following key components.

• FIMS Authorization Server (AuthZ): An OAuth 2.0 compliant authorization server.

OAuth 2.0 is an authorization framework for delegated access to APIs used to protect UTM

APIs from unauthorized access. For UFT FIMS, AuthZ provided authorization services for

data correlation and historical query APIs.

• UAS Data Correlation Capability (UDCC): A prototype data correlation capability to

support authorized queries for information held by the FAA that correlates to information

received from broadcast remote Identification (ID).

• FIMS Authorized User Portal: A prototype web-based user interface accessible to

authorized FAA users that provides the ability to submit data correlation or historical queries.

• FIMS Admin Portal: A prototype web-based user interface used to provide FIMS

administrators access to tools to administer FIMS (e.g., manage USS roles and scopes used

by FIMS AuthZ). For the purposes of test and demonstrations like UFT, the admin portal

provides visualizations for operational intent and constraints for awareness of UTM activities.

• Historical Query: A future concept capability that was prototyped and tested during UFT.

Historical query allows the FAA to obtain on-demand access to USS-held data. USS-held

data may include operational intent, Unmanned Aircraft (UA) position info, or constraints.

• Data Collector: A service accessible via an API used to ingest data specific to testing,

validation, and demonstration activities that support analysis and metric generation. The data

collector primarily supported data collection that is identified as the FAA’s responsibility.
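As a rough illustration of the data correlation idea, the sketch below looks up a record by an identifier received from a Remote ID broadcast, and only does so for callers holding an appropriate scope. The record fields, the scope name, and the in-memory registry are assumptions made for this example and do not describe the actual UDCC data model or interface.

```python
from dataclasses import dataclass
from typing import Dict, Optional, Set

# Hypothetical scope name; the real scope identifiers are not given here.
DATA_CORRELATION_SCOPE = "data_correlation"


@dataclass(frozen=True)
class RegistryRecord:
    """Hypothetical FAA-held record associated with a broadcast identifier."""
    serial_number: str
    operator_id: str
    operation_id: str


def correlate(
    serial_number: str,
    registry: Dict[str, RegistryRecord],
    caller_scopes: Set[str],
) -> Optional[RegistryRecord]:
    """Return the record matching a broadcast serial number, if any.

    Raises PermissionError when the caller lacks the required scope.
    """
    if DATA_CORRELATION_SCOPE not in caller_scopes:
        raise PermissionError("caller is not authorized for data correlation")
    return registry.get(serial_number)


registry = {"SN-001": RegistryRecord("SN-001", "operator-42", "op-intent-7")}

# An authorized caller correlates an observed broadcast identifier.
match = correlate("SN-001", registry, {DATA_CORRELATION_SCOPE})
print(match)
```

The authorization check comes before the lookup so that an unauthorized caller learns nothing about whether a given identifier exists in the registry.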

2.1.4 Additional Industry Services

An area that UFT explored was industry taking on responsibilities that had been managed, for

demonstration purposes, by government entities in previous demonstration and test activities. The

two key areas where UFT explored this concept were the UTM authorization server and the

checkout process for USSs.


2.1.4.1 Industry Managed USS Test Harness

In UFT, industry participants proposed using a test suite developed by the Linux Foundation’s InterUSS Platform for industry checkouts. This test suite is intended to enable a USS to validate that it is in alignment with standards, such as the ASTM USS Interoperability Standard. Each USS can run the test suite independently, and a group of partners can also run it together to test interoperability.

2.1.4.2 Industry Authorization Server

The authorization server in UTM serves an important function for securing interactions via the

issuance and management of OAuth 2.0 access tokens to entities in UTM. The industry-hosted

authorization server supported USS-USS data exchanges per the ASTM USS Interoperability

standard.

2.1.5 Message Security

One of the core objectives of UFT was to develop, test, and evaluate approaches to secure

exchanges in the UTM ecosystem. UFT evaluated a series of security objectives that are important

to the UTM ecosystem, specifically authorization, authentication, data integrity, non-repudiation,

and confidentiality. The sections below introduce the security objectives along with a high-level

description of the relevant UFT testing and evaluation activities.

2.1.5.1 Authorization

The federated nature of the UTM ecosystem necessitates that there be Identity Access Management

(IAM) mechanisms in place to ensure that the systems and users acting within UTM have the

appropriate permissions, or authorization, to exchange messages. The OAuth 2.0 framework is an

appropriate approach to achieve the authorization of system-to-system communications by using

a trusted authorization server that issues access tokens to the systems (i.e., USSs and FIMS) in

UTM. The use of OAuth allows for the application of role-based access controls for the USSs

exchanging data in the UTM ecosystem. Testing in UFT evaluated the potential for industry-driven

services to fill this role, including an industry-hosted authorization server. The implications of an

industry-hosted authorization server are explored further in Section 4.8.
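
The role-based check described above can be illustrated with a minimal sketch. It assumes the access token has already been cryptographically validated and decoded into claims; the scope strings, the `AccessTokenClaims` type, and the `is_authorized` helper are hypothetical names introduced for illustration and are not taken from the UFT implementation.

```python
from dataclasses import dataclass

# Hypothetical role-to-scope mapping, for illustration only. Real scope
# strings are defined by the governing standard and authorization server.
ROLE_SCOPES = {
    "strategic_coordination": "utm.strategic_coordination",
    "constraint_management": "utm.constraint_management",
}


@dataclass(frozen=True)
class AccessTokenClaims:
    subject: str        # identity of the calling system (e.g., a USS)
    scopes: frozenset   # scopes granted by the authorization server
    expires_at: float   # expiry, in seconds since the Unix epoch


def is_authorized(claims: AccessTokenClaims, required_role: str, now: float) -> bool:
    """Return True if the token is unexpired and carries the scope for the role.

    Assumes the token signature was verified before the claims were built.
    """
    required_scope = ROLE_SCOPES.get(required_role)
    if required_scope is None:
        return False  # unknown role: deny by default
    if now >= claims.expires_at:
        return False  # expired tokens are rejected
    return required_scope in claims.scopes


claims = AccessTokenClaims(
    subject="uss-a.example",
    scopes=frozenset({"utm.strategic_coordination"}),
    expires_at=1_700_000_600.0,
)
print(is_authorized(claims, "strategic_coordination", now=1_700_000_000.0))
print(is_authorized(claims, "constraint_management", now=1_700_000_000.0))
```

In this sketch, a receiving system grants a request only when the caller's token carries the scope tied to the requested role, which is one simple way to express role-based access control on top of OAuth 2.0 access tokens.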

2.1.5.2 Authentication, Data Integrity, and Non-Repudiation

UTM data exchanges serve critical operational functions, so it is vital that they carry adequate

information security protections. Since these exchanges occur over the public internet, it is

important to layer several security approaches to achieve an adequate level of security. For point-

to-point security, UFT data exchanges required the use of Transport Layer Security (TLS). On top

of TLS, these exchanges should apply security to the messages themselves, to maintain

information integrity beyond just a point-to-point connection. The application of digital signatures

to the Hypertext Transfer Protocol (HTTP) communications in the UTM ecosystem provides a

cryptographic mechanism to ensure data integrity and non-repudiation (i.e., preventing an entity from

denying having sent a message).[3] If the signatures are linked to a trusted Public Key Infrastructure

(PKI), then the exchange also has the proper authentication.
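
The idea of protecting the message itself, independent of the TLS connection, can be sketched as follows. This is a simplified illustration and not the UFT message signing scheme: the covered components, the `signature_base` layout, and all function names are assumptions. To stay within the Python standard library, HMAC-SHA256 with a shared key stands in for an asymmetric digital signature, so the sketch demonstrates tamper detection (integrity) only. Non-repudiation and PKI-based authentication require a private-key signature tied to a certificate, which is not shown here.

```python
import base64
import hashlib
import hmac


def content_digest(body: bytes) -> str:
    """Base64-encoded SHA-256 digest of the HTTP message body."""
    return base64.b64encode(hashlib.sha256(body).digest()).decode("ascii")


def signature_base(method: str, path: str, body: bytes) -> bytes:
    """Join the covered components into the byte string that gets signed.

    The component list and layout are illustrative assumptions.
    """
    return "\n".join([method.upper(), path, content_digest(body)]).encode("utf-8")


def sign(key: bytes, method: str, path: str, body: bytes) -> str:
    # HMAC-SHA256 is a symmetric stand-in for an asymmetric signature.
    mac = hmac.new(key, signature_base(method, path, body), hashlib.sha256)
    return base64.b64encode(mac.digest()).decode("ascii")


def verify(key: bytes, method: str, path: str, body: bytes, signature: str) -> bool:
    """Recompute the signature and compare in constant time."""
    return hmac.compare_digest(sign(key, method, path, body), signature)


key = b"demo-shared-secret"                 # hypothetical key material
body = b'{"state": "Accepted"}'             # hypothetical message body
sig = sign(key, "POST", "/example/path", body)
print(verify(key, "POST", "/example/path", body, sig))
print(verify(key, "POST", "/example/path", b'{"state": "Activated"}', sig))
```

Because the signature covers a digest of the body, any change to the message after signing causes verification to fail, even if the message passes through intermediaries beyond a single point-to-point connection.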


2.1.5.3 Confidentiality

The UTM ecosystem may contain sensitive data of national security, privacy, or proprietary nature.

As with point-to-point security for data integrity and authentication, the TLS

protocol provides point-to-point confidentiality protections for UTM data exchanges. For certain

data exchanges, it might be necessary to apply additional confidentiality protections at the message

level. Tests conducted in UFT examined the application of message-level encryption to certain

sensitive data exchanges between the FAA and UTM industry participants, specifically for data

correlation queries by authorized users for FAA-held data. UFT testing did not include actual

sensitive data; simulated sensitive datasets were used instead.

2.2 UFT Partners and FAA Support

As noted in Section 2.2, UTM operations are primarily managed by a federated set of actors,

including UAS operators and the USSs that support them. Given this, it was critical that UTM test

and evaluation activities included a diverse set of stakeholders to ensure the envisioned capabilities

address the varied sets of needs and interests. UFT focused on this need and brought together

various FAA stakeholders, NASA, industry service providers, UAS operators, and public safety

stakeholders to support use cases within the integrated test environment.

2.2.1 Test Site Partners

Table 1 provides overviews of the industry partners and other participating stakeholders who

worked with MAAP and NYUASTS in UFT. The test sites oversaw project management for

activities executed at their sites; provided infrastructure/services to support USS and UAS operator

activities; coordinated with the NIEC lab to provide the integrated test environment; and provided

additional support to the FAA, partners, and other stakeholders as needed.

Table 1: Test Site Partners

| Partner | UFT Role |
| --- | --- |
| MAAP in Partnership with LSUASC | |
| MAAP | Project Management, Operator, Visual Observer, UAS Platforms |
| LSUASC | Operator, Visual Observer, UAS Platforms |
| ANRA Technologies | USS, Operator, Remote ID Devices and Receiver, Data Correlation Client |
| Collins Aerospace | USS |
| OneSky | USS |
| Wing | Operator, DSS Provider, Industry AuthZ Provider |
| Raytheon Technologies | Radar Supplemental Data Service Provider (SDSP) |
| Streamline Designs | Operator, Visual Observer, UAS Platforms |
| AirspaceLink | Constraint Manager |
| Virginia FIX | Constraint Provider |
| NYUASTS | |
| NUAIR | Project and Flight Operations Management, Operator, Ground Crews, Visual Observers, UAS Platforms |
| ANRA Technologies | USS, Remote ID Receiver, Data Correlation Client |
| AX Enterprize | USS, Remote ID Devices and Receiver, Operator, UAS platforms, Data Correlation Client |
| CAL Analytics | USS |
| OneSky | USS |
| Oneida County Sheriff’s Department | Public Safety, Operator |
| Oneida Indian Nation Police Department | Public Safety, Operator |

USSs provided technologies and services to support live and simulated flights of UA, integrating

them into the test environment and ensuring they conformed to applicable standards and project

requirements. Public safety partners operated UAS, used constraint services in simulated public safety

conditions, and used broadcast remote ID data to initiate queries to the FAA’s prototype data

correlation capability. Other partners supported UFT in various ways, including providing SDSP

capabilities, operating UAS, and managing constraints.

2.2.2 NextGen Integration and Evaluation Capability (NIEC) Lab

The FAA NIEC lab provided infrastructure, technologies, and applicable support to enable an

integrated test environment for the test sites and their partners. Activities included, but were not

limited to, software development, alignment to ASTM standards, development of the FAA’s UFT

message security requirements, provision of FIMS components described in Section 2.1.3,

connecting USSs into FIMS infrastructure, and conducting USS checkout processes for data

correlation and historical query. More information on the NIEC can be found in [3].

2.2.3 NASA

As part of the Onboarding and Checkout phase of UFT and in collaboration with the FAA, NASA

hosted an Industry Day. As UFT progressed, NASA participated in the scoping discussions as the

technical scope of the project was being coordinated across the project’s stakeholders. In the later

stages of testing, NASA provided simulated operations to add complexity to the use cases.

This effort was integral in achieving the desired complexity as laid out in the test approach. NASA

was also responsible for creating a message security extension to the InterUSS test suite, which

validated USS compliance with the UFT message security requirements. Additionally, throughout

the development and execution of UFT, NASA played an advisory role.


2.3 Operating Environments

This section provides details on the operating environments used by NYUASTS and MAAP to

execute the use cases and scenarios for UFT.

2.3.1 New York UAS Test Site (NYUASTS)

The NYUASTS is an FAA-designated UAS test site located at a towered airport, surrounded by

Class D airspace, and supported by the Low Altitude Authorization and Notification Capability

(LAANC). NYUASTS defined two 15-square-mile operating areas for UFT activities. One area

encompassed the Griffiss International Airport area (labeled: North) and the other encompassed the

Oriskany flight area (labeled: South) as shown in Figure 3.

Figure 3: NYUASTS Operating Areas

2.3.2 Mid-Atlantic Aviation Partnership (MAAP)

For UFT, MAAP supported three different operating environments as shown in Figure 4.

• Virginia Tech’s Kentland Farms: The Kentland Farm Agricultural Research Center is

owned by Virginia Tech and contains the Kentland Experimental Aerial Systems (KEAS)

lab. Kentland Farm is 1,800 acres in size, bordered on the South and West by the New

River, and 2.6 miles corner-to-corner. The airspace over Kentland Farm is Class G from

surface to 700 feet AGL.

• Uptown Christiansburg, VA: Christiansburg Huckleberry Park is in Uptown

Christiansburg. In addition, Wing delivery flights are performed around this test area.

• Corpus Christi, TX: Cole Park in Corpus Christi was used to conduct flights by LSUASC.

Figure 4: MAAP Operating Environments (MAAP shown on left, LSUASC shown on right)


3 UFT Execution

From summer 2022 to early spring 2023, MAAP and NYUASTS worked with their partners, the

FAA, and NASA to integrate their systems into the test network, test USS services functionality

and interoperability, define test cards and data collection approaches, and execute flight tests to

prepare for final showcase events. UFT was executed in a hybrid environment, through the use of

online collaboration software and simulated flights where appropriate, to help foster the

collaborative virtual environment to prepare for major test events (e.g., shakedowns and

showcases). This section describes the key activities conducted through the stages of UFT.

3.1 Test Approach

UFT testing was conducted using live flights at all operating environments, supplemented

with simulated operations where the desired complexity could not be achieved with live flights

alone. Complexity was a key element of UFT testing and is described in Section 3.1.1. Additionally,

to better represent real-world conditions, the UFT approach was designed to minimize scripting

to the greatest extent appropriate. The approach aimed for participants to gain situational

awareness and make decisions on planning and replanning using UTM services as events

happened, instead of following a plan that was defined in advance.

UFT participants and stakeholders were integrated and tested through a series of stages as shown

in Figure 5. At each stage, issues were identified and tracked, and solutions were developed and tested

as the project progressed to the next stage.

Figure 5: UFT Test Approach Stages

3.1.1 Complexity

For UFT activities, operational complexity was characterized through multiple perspectives,

including the following.

• Number of Interactions: The number of interactions can be categorized by instances

where operations conflict with one another or with constraints, driving the need for

coordination, deconfliction, and other actions/activities.

• Types of Interactions: The types of interactions are categorized as the interactions

between flights with varying types of operations as well as constraints.

• Operational Tempo: Operational tempo is categorized as the number of flights planned

and flown in an operational area within a given time window. Lower or higher operational

tempo may have varying impacts on operational complexity.


• Operating Environment: Operating environment includes the environment that

operations are occurring within and the changes that may occur within that environment for

various reasons. UFT aimed to test and evaluate capabilities and standards in operating

environments of varying complexity to discover how effective the UTM services are as the

level of complexity changes.

3.2 Data Collection Approach

A Data Management Plan (DMP) was developed and agreed upon by UFT participants to support

data collection for UFT. Data collection identified in the DMP is for UFT analysis only and does

not imply any future FAA auditing needs. The DMP provided the Measures of Effectiveness

(MOEs), detailed a collection of metrics to be generated in support of the MOEs, and described the use of

surveys to capture non-quantitative feedback from participants. Metric generation responsibility

was split between the FAA and test sites based upon their involvement in the relevant capability.

For the FAA, the metrics focused on additional capabilities beyond the ASTM standard and used

APIs to collect the data. For the test sites, metrics focused on data relevant to testing the ASTM

USS Interoperability Standard; the mechanisms for data collection and metric generation were left

to the test sites, which decided on the most effective approach to presenting this information. This

enabled the test sites to explore mechanisms they were familiar with and deemed appropriate.

Data collection mechanisms were developed, tested, and matured during the phases of UFT from

Shakedown 1 to final showcase. All data collection mechanisms were fully functioning by final

showcase week. Data was also collected during the shakedown events, but due to the nature of the

testing during these events, the analysis and visualizations in the section below do not include data

from the shakedowns. However, analysis performed throughout the shakedowns yielded useful

insights and lessons learned that informed stakeholders as UFT progressed and were

considered as part of the observations.

3.2.1 Measures of Effectiveness (MOEs)

For UFT, MOEs were developed to determine if the services, systems, and technologies

demonstrated during the associated activities were able to satisfactorily support operations

conducted in the test environments. The capabilities identified in Section 2 were used to develop

the MOEs listed in Table 2.

Table 2: UFT MOEs

| Label | Description |
| --- | --- |
| UFT-MOE-1 | Industry services supporting UTM effectively support UAS operations staying safely separated. |
| UFT-MOE-2 | UFT activities successfully test planning and coordination in operating environments of varying complexity. |
| UFT-MOE-3 | UFT participants validate the use of elevated priority operations. |
| UFT-MOE-4 | UFT activities successfully test data correlation service enhancements providing authorized users additional information related to UAS. |
| UFT-MOE-5 | UFT participants successfully test secure information exchange using required IAM and message security capabilities. |

To support the MOEs, a set of metrics was defined to provide data analysis in the areas of

complexity, priority operations, constraints, data correlation, and cybersecurity. In addition,

surveys and whitepapers were also created to support MOEs. Surveys were completed by various

UFT participants at various phases of the project. More information on the analysis and results of

the metrics and surveys is provided throughout Section 4 and its subsections.

3.3 Entity Onboarding

Entity onboarding was the initial execution phase of the UFT project and was used to get all

partners integrated into the project. The entity onboarding phase consisted of a set of procedures

and forms completed by UFT partners. The list below provides a summary of activities conducted

during entity onboarding.

• Test sites provided contact information for partners to onboard to the project collaboration

tools, Slack (online communication) and Redmine (information exchange and project

management).

• USSs completed the entity onboarding form detailing which UTM roles/services they would

support.

• USSs used a DocuSign process to obtain International Aviation Trust Framework (IATF)

certificates from the FAA’s prototype Certificate Authority (CA).

• USSs provided details on partner use of the FAA’s Server-Based Certificate Validation

Protocol (SCVP) web service, which is used for validation of certificates.

3.4 Checkout

For an activity such as UFT, one of the critical elements that helps to facilitate efficient,

streamlined, and secure integration into the UTM ecosystem is the checkout process. Checkout

processes test the capabilities of each of the actors involved in the activity and verify that each

meets a certain level of functionality. Checkout processes also help to verify interoperability across

all participants. For UFT, automated testing was used for USS functionality per the ASTM USS

Interoperability Standard and manual tests were used to test additional capabilities such as data

correlation and historical query. NYUASTS automated checkouts were conducted December 2022

through January 2023. For MAAP, automated checkouts were conducted January through

February 2023. Manual tests for data correlation and historical query capabilities were

conducted February through March 2023 as partners’ implementations matured.


3.4.1 Industry Led Checkout

In UFT, industry participants proposed that industry take on the responsibility of USS API

checkouts, specifically using the open source InterUSS automated test suite [5]. These tests were

used by USSs to validate alignment with the ASTM USS Interoperability Standard. The tests were

independently executed by each USS participating in UFT. NASA developed an extension to also

validate USSs’ implementation of message signing. Section 4.10 further expands the observations

from the use of the InterUSS test suite.

3.4.2 FAA Led Checkout

Manual tests were used to check out data correlation and historical query capabilities. These tests

were performed between the NIEC lab and each entity providing data correlation and historical

query capabilities.

3.5 Shakedowns

The operational testing of UFT capabilities in the integrated test environment was conducted

through shakedown activities. These activities tested end-to-end systems through the operational

use cases. During the shakedown activities, UFT partners were able to exercise their vehicles and

systems to test the various standards, concepts, and operational requirements. In many cases, this

was the first validation of updated standards that were tested across different industry partners in

a live environment, revealing several challenges previously unknown to the UTM community. The

shakedown tests allowed partners to identify and resolve challenges and ensure the success of the

final showcase.

Challenges identified and overcome during shakedowns included the following.

• USS services checkout for services functions and interoperability

• USS FAA message signing checkout

• Message signing implementations

• Prioritization handling

• USS conformance monitoring

• USS support for inflight rerouting

The scenarios used during the shakedowns are outlined in Appendix A.1.

3.5.1 Shakedown 1

3.5.1.1 NYUASTS

From January 23–27, 2023, NYUASTS UFT Shakedown 1 was executed in Rome, NY at the

NYUASTS. This event was a live-fly exercise utilizing both BVLOS and Visual Line of Sight

(VLOS) operations. Additionally, simulated flights were used to supplement complexity.


Deconfliction was handled for all operators through an assigned USS. A total of 76 operations

were conducted during Shakedown 1.

This event followed an approach where complexity was gradually added throughout the testing.

This approach allowed for issues to be identified and corrected during the phase of reduced

complexity. Each day of testing focused on a limited number of scenarios to provide depth to issue

identification and correction.

3.5.1.2 MAAP

The first shakedown at MAAP was a simulated event January 30–February 3, 2023, in which major

test points were conducted remotely. A test director located at MAAP oversaw the test and

managed the screen share and telephone conference line that served as the primary communication

method between all participants. Slack was used as a secondary communication method. All USSs

called into the conference line to assist in the testing.

ANRA, Collins, and Streamline Designs flight teams utilized Software-in-the-Loop (SITL)

simulators for their aircraft. MAAP utilized a combination of Hardware-in-the-Loop (HITL) and

SITL simulators. For the UAS, HITL and SITL simulation was used as a stand-in for actual flights.

3.5.2 Shakedown 2

3.5.2.1 NYUASTS

NYUASTS UFT Shakedown 2 was executed in Rome, NY at the NYUASTS March 6–10, 2023.

Like the previous shakedown, it mixed simulated and live operations and used an approach of

increasing complexity over time. Through this shakedown activity, a subset of scenarios and

capabilities were identified to be run during the final showcase. The later days of the shakedown

were used to further test this subset of scenarios and capabilities in preparation for their use in

the final showcase.

All activities planned for testing were performed during the shakedown. The planned capabilities

for this more mature shakedown included UAS flights and telemetry submission, NASA scenario

integration, SCVP, priority operational intent submission, dynamic replanning, operator

notification, constraint submission, conformance monitoring, remote ID, data correlation queries,

DMP data collection, historical query, and metrics collection. Dynamic rerouting around injected

constraints was a primary focus of the week’s testing and the efforts helped to identify mature

capabilities as well as identify issues, which were solved prior to showcase execution. A total of

335 operations were conducted during Shakedown 2.

3.5.2.2 MAAP

MAAP’s second shakedown was conducted March 20–22, 2023, and March 29–31, 2023, at

Kentland Farms near Blacksburg, VA and in Corpus Christi, TX. All use cases and major test

points were validated via live and simulated flights, with a total of 73 flights and a total of 8.4

flight hours. Testing included iterations of scenarios which exercised all the needed interactions

for each use case.


A key success of this shakedown was identifying and working through a challenge with historical

query. The remaining challenges identified were related to SDSP integration, InterUSS checkouts,

and remote ID information over the UTM network. Although these challenges did not affect core UFT objectives,

they were identified and addressed during the shakedown testing. By the end of Shakedown 2, the

UTM functionality required for the showcase was in place and working as expected. A total of 65

operations were conducted during Shakedown 2.

3.6 Final Showcase

The final showcase events were executed in spring 2023 at the respective test sites. The NYUASTS

showcase was held on April 5, 2023, and focused its messaging on highly technical information

targeted to working-level participants. An Oneida County executive delivered pre-recorded

opening remarks.

The MAAP showcase was an executive-level event held on April 19, 2023, and split between the

Kentland Farms location in Blacksburg, VA and the Wing Nest in Christiansburg, VA. An opening

statement from the FAA Administrator was shared to open the event. FAA’s Office of

Communications, as well as local media—including NBC, CBS, and Fox affiliates—were present

at the second location to interview the ANG Assistant Administrator and MAAP Test Site Director.

Both events included demonstrations of multiple use cases. They also featured panels and Question

and Answer (Q&A) opportunities between the FAA, test site personnel, and industry partners.

Accompanying scenario videos were developed in a narrative style to support the event speakers

and translate complex technology for a varied audience. Table 3 and Table 4 show all use cases

and scenarios used during showcase activities. The scenarios tested during shakedown activities

were modified and curated to present the appropriate capabilities based on the showcase audience

and timeframe available.

Table 3: NYUASTS Showcase Scenarios

| Scenario | Goals |
| --- | --- |
| Strategic Deconfliction of UTM Operations | • Demonstrated UTM operational intent submission, constraint submission, prioritization, strategic conflict detection, conformance monitoring, broadcast remote ID transmission/receipt, and data correlation. |
| UTM Services Supporting Dynamic Replanning | • Highlighted operation deconfliction (without priority), advisory constraints, and conformance monitoring • The second phase focused on dynamic replanning |
| UTM Operations in Environments of Varying Complexity | • Demonstration of operation complexity • Operation prioritization and in-route replanning (rerouting) were also demonstrated |


Table 4: MAAP Showcase Scenarios

| Scenario | Goals |
| --- | --- |
| UTM Operations in Environments of Varying Complexity | • Evaluate cooperative traffic management and various means of strategic conflict resolution based on the ASTM USS Interoperability Standard • Test how standards, technologies, and capabilities support mixed UAS operations in complex environments • Evaluate UTM services, such as strategic deconfliction, for criticality in supporting BVLOS operations in complex environments to inform the evolving regulatory framework and future service qualification |
| Public Safety UTM Operations in Environments of Varying Complexity | • Test cooperative operating practices for resolving conflicts • Evaluate interoperability of having higher priority operations in the vicinity of lower priority operations • Inform approaches for service qualification |
| Public Safety Queries Due to Concern of UAS Operations | • Test the FAA’s data correlation service • Evaluate functionalities associated with: IAM; data and service access per user or entity permissions; message security • Obtain feedback from stakeholders on tested data correlation capabilities • Demonstrate use of the FAA’s historical data query capability using location-based query parameters • Obtain feedback from stakeholders on data correlation • Obtain data on the implementation of message signing |

During final showcase week activities, a total of 197 operations were flown at the NYUASTS test

environment in Rome, NY. At MAAP’s testing locations, a total of 147 operations were flown.

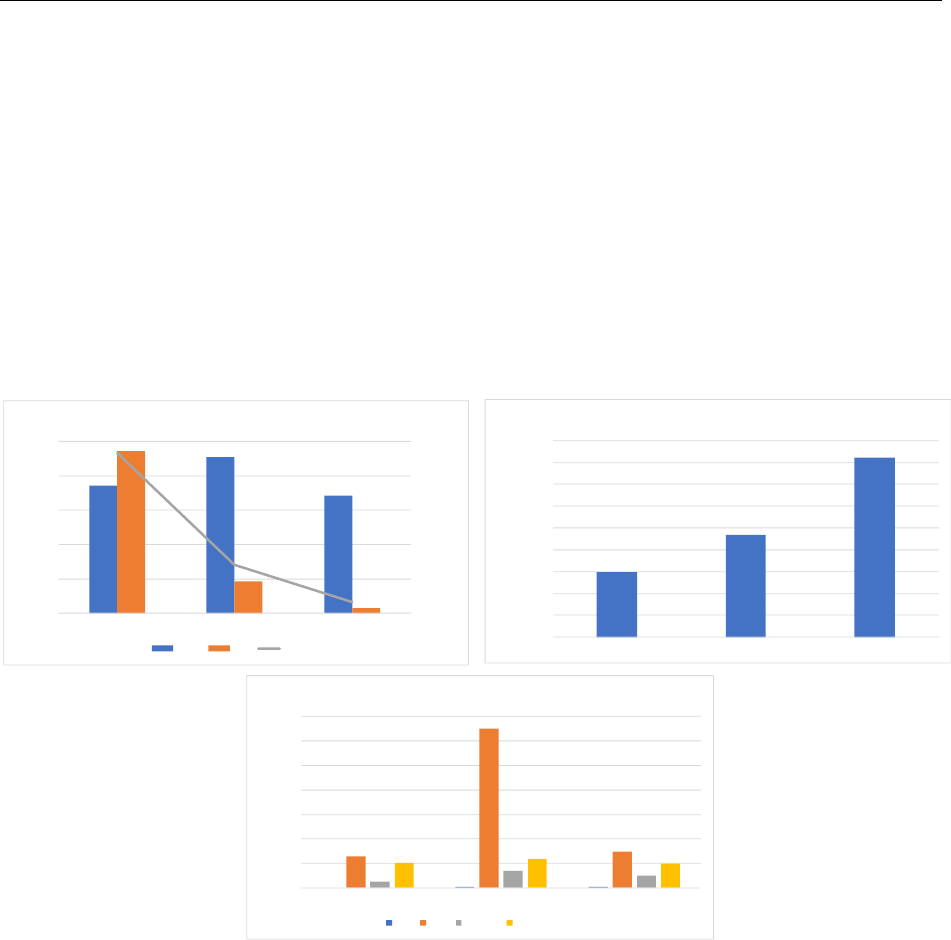

Figure 6 provides a breakdown of the operations supported by the USSs across each test site.

Figure 6: Operations Flown During Final Showcase Activities


4 Demonstrated Capabilities

This section provides an analysis and summary of data collected during UFT activities. Unless

otherwise stated, metrics provided for analysis are based on data collected during final showcase

week activities.

Table 5 shows how the demonstrated capabilities discussed in the following subsections map to

the MOEs described in Section 3.2.1. The table also highlights if data collection for the

demonstrated capability was done by the test site or the FAA.

Table 5: Demonstrated Capability to MOE Mapping

| Demonstrated Capability | Section | MOE | Collected By |
| --- | --- | --- | --- |
| Operational Complexity | 4.1 | UFT-MOE-1, UFT-MOE-2, UFT-MOE-3 | Test Site |
| Strategic Deconfliction | 4.2 | UFT-MOE-1, UFT-MOE-2 | Test Site |
| Priority Operations | 4.3 | UFT-MOE-3 | Test Site |
| Conformance Monitoring | 4.4 | UFT-MOE-1, UFT-MOE-2 | Test Site |
| Constraint Management and Processing | 4.5 | UFT-MOE-1 | Test Site |
| Data Correlation | 4.6 | UFT-MOE-4 | FAA |
| Historical Query | 4.7 | UFT-MOE-1 | FAA |
| Authorization Servers | 4.8 | UFT-MOE-5 | N/A |
| Message Security | 4.9 | UFT-MOE-5 | FAA |

4.1 Operational Complexity

As described in Section 3.1.1, complexity was a key element of the UFT test approach. The goal

was to provide environments of varying complexity to test the effectiveness of UTM services as

operational complexity changes. Table 6 highlights the data collection metrics to show varying

operational complexity.

Table 6: Operational Complexity Metrics

| Metric ID | Metric Title | Description | Supported MOE |
| --- | --- | --- | --- |
| COMP-06 | Tempo/density of operations | How many operations (live and simulated) are occurring within an operating area over time? | UFT-MOE-2 |
| COMP-07 | Tempo/density of operations by state | How many operational intents within an operating area are in each operational intent state (Accepted, Activated, Nonconforming, Contingent) over time? | UFT-MOE-2 |


4.1.1 Analysis

4.1.1.1 NYUASTS

NYUASTS captured operational tempo/density (COMP-06) in both the North and South operating

areas. Figure 7 shows the operational density for the North and South operating areas. In the North,

the maximum density was 11 operations; in the South, it was 18 operations.
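
One way to derive a maximum density figure of this kind is to count how many operations are active at the same time within an operating area. The sketch below is an assumption about how such a peak could be computed from operation start and end times; it is not the method used by the test sites, and the function name and sample intervals are hypothetical.

```python
from typing import Iterable, Tuple


def peak_concurrent_operations(intervals: Iterable[Tuple[float, float]]) -> int:
    """Maximum number of operations active at the same time.

    Each interval is (start, end) in any consistent time unit. An operation
    is treated as active on the half-open range [start, end).
    """
    events = []
    for start, end in intervals:
        if end <= start:
            raise ValueError("each interval must end after it starts")
        events.append((start, 1))    # operation becomes active
        events.append((end, -1))     # operation ends
    # Sort by time; at equal times, ends (-1) are processed before starts (+1)
    # so back-to-back operations are not counted as overlapping.
    events.sort(key=lambda e: (e[0], e[1]))
    active = peak = 0
    for _, delta in events:
        active += delta
        peak = max(peak, active)
    return peak


# Hypothetical operation windows (minutes from the start of a test run).
sample = [(0, 10), (5, 15), (10, 20), (12, 14)]
print(peak_concurrent_operations(sample))  # prints 3
```

The same sweep can be run separately per operating area (for example, North and South) to obtain one peak value per area.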

Figure 7: NYUASTS Operational Density

NYUASTS also captured tempo/density by operational intent state (COMP-07). The analysis is

broken down by day and shown in Figure 8.
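
A per-state tally of this kind can be sketched as follows. The four state names come from the COMP-07 description in Table 6; the snapshot structure, identifiers, and function name are hypothetical and only illustrate the counting step at a single sampling instant.

```python
from collections import Counter

# The four states named in the COMP-07 description (Table 6).
STATES = ("Accepted", "Activated", "Nonconforming", "Contingent")


def count_by_state(snapshot):
    """Count operational intents per state at one sampling instant.

    `snapshot` maps a (hypothetical) operational intent ID to its state.
    """
    unknown = set(snapshot.values()) - set(STATES)
    if unknown:
        raise ValueError(f"unrecognized state(s): {sorted(unknown)}")
    counts = Counter(snapshot.values())
    # Report every state, including those with zero intents.
    return {state: counts[state] for state in STATES}


snapshot = {
    "oi-1": "Accepted",
    "oi-2": "Activated",
    "oi-3": "Activated",
    "oi-4": "Nonconforming",
}
print(count_by_state(snapshot))
```

Repeating the tally at regular sampling times yields a time series per state, which is the shape of data a density-by-state chart would plot.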


Figure 8: NYUASTS Density by State

4.1.1.2 MAAP

MAAP calculated density of operations (COMP-06) across multiple runs of four use cases. Some

use cases were excluded from data capture since density was not a focus. Density was calculated

using the method described in Appendix D. A maximum density of 6 UA was reached in both the

0.2-square-mile and 0.4-square-mile areas. Figure 9 provides visualizations of the maximum

operational densities achieved per use case.

Figure 9: MAAP Tempo/Density by Use Case


MAAP also captured tempo/density by operational intent state (COMP-07). The analysis is broken

down by day and shown in Figure 10.

Figure 10: MAAP Density by State

4.2 Strategic Deconfliction

Strategic deconfliction is a service consisting of the arrangement, negotiation, and prioritization of

intended operational volumes, routes, or trajectories of UAS operations to minimize the likelihood

of airborne conflicts between operations. Strategic deconfliction is specifically highlighted in the

FAA UTM Concept of Operations (ConOps) v2.0 [6] as one of the key capabilities that UAS

operators use to maintain separation from one another and from constraints (e.g., obstacles,

weather, airspace constraints), in a cooperative traffic management ecosystem such as UTM. The

ASTM USS Interoperability Standard uses the USS role for strategic coordination to support

strategic deconfliction. Strategic coordination is comprised of two services: 1) Strategic Conflict

Detection, which determines if an operational intent conflicts with other operational intents, and 2)

Aggregate Operational Intent Conformance Monitoring, which monitors an operator’s aggregate

conformance with operational intents over time.
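
Strategic conflict detection can be pictured as an intersection test between the four-dimensional volumes (area, altitude band, and time window) that make up two operational intents. The sketch below is a deliberately simplified illustration: it assumes axis-aligned bounding boxes instead of the polygon or circle outlines a real implementation would handle, treats volumes that merely touch as non-conflicting, and uses hypothetical type and function names.

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass(frozen=True)
class Volume4D:
    # Axis-aligned box: a simplification chosen for this sketch.
    west: float
    south: float
    east: float
    north: float
    alt_lower_m: float
    alt_upper_m: float
    t_start: float   # seconds since an arbitrary reference
    t_end: float


def _ranges_overlap(a_lo: float, a_hi: float, b_lo: float, b_hi: float) -> bool:
    """True when two ranges share more than a boundary point."""
    return a_lo < b_hi and b_lo < a_hi


def volumes_conflict(a: Volume4D, b: Volume4D) -> bool:
    """Two volumes conflict only if they overlap in every dimension."""
    return (
        _ranges_overlap(a.west, a.east, b.west, b.east)
        and _ranges_overlap(a.south, a.north, b.south, b.north)
        and _ranges_overlap(a.alt_lower_m, a.alt_upper_m, b.alt_lower_m, b.alt_upper_m)
        and _ranges_overlap(a.t_start, a.t_end, b.t_start, b.t_end)
    )


def intents_conflict(intent_a: Sequence[Volume4D], intent_b: Sequence[Volume4D]) -> bool:
    """An intent is modeled here as a list of volumes; any overlapping pair conflicts."""
    return any(volumes_conflict(va, vb) for va in intent_a for vb in intent_b)


a = Volume4D(0, 0, 10, 10, 0, 100, 0, 60)
b = Volume4D(5, 5, 15, 15, 50, 150, 30, 90)     # overlaps a in all dimensions
c = Volume4D(5, 5, 15, 15, 50, 150, 60, 120)    # starts exactly when a ends
print(intents_conflict([a], [b]))  # prints True
print(intents_conflict([a], [c]))  # prints False
```

Separation in any single dimension (space, altitude, or time) is enough to clear a conflict, which is why replanning can resolve a conflict by shifting a route, an altitude band, or a departure time.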

For UFT, all USSs utilized the ASTM USS Interoperability Standard. This standard provides the

framework for deconflicting operations with strategic conflict detection but leaves the approach to

strategic conflict resolution open for the individual USS to decide. Strategic conflict resolution is


the process of resolving conflicts through the modification of operational intents. Although there

is no absolute time threshold, strategic conflict resolution requires sufficient time before the

conflict to generate, coordinate, and implement the modification to the operational intent. Figure

11 shows an example of the deconfliction used, showing multiple operations from various USSs

successfully deconflicted without any overlaps.

Figure 11: Display of Strategically Deconflicted Operations

Table 7 highlights the key data collection metrics to assess strategic deconfliction and supporting

services/technologies.

Table 7: Strategic Deconfliction Metrics

| Metric ID | Metric Title | Description | Supported MOE |
|---|---|---|---|
| COMP-02 | Attempts for accepted operation | • How many attempts were needed by the operator/Remote Pilot in Command (RPIC) to obtain an accepted operation? • Categorize by operator/RPIC and USS (min, max, average, 95th percentile). | UFT-MOE-2 |
| COMP-03 | Operational replan causes | • Number and percentage of replans by cause (e.g., environmental, priority operation, constraints, etc.). • Replans occur after an operational intent is at least in an Accepted state. | UFT-MOE-2 |
| COMP-04 | Operational replan per operational area | • How many replans occur within an operational area. | UFT-MOE-2 |
| COMP-05 | Operational replan stage | • Number and percentage of replans occurring pre-flight vs. in-flight. | UFT-MOE-2 |
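As an illustration of how the COMP-02 statistics (min, max, average, 95th percentile) could be computed per USS, here is a minimal sketch. The attempt counts and USS names are hypothetical and are not UFT data, and the linear-interpolation percentile is only one common convention; the report does not state which percentile method was used.

```python
import math
from statistics import mean


def percentile(values, pct):
    """Percentile by linear interpolation between ranks (an assumed convention)."""
    if not values:
        raise ValueError("values must not be empty")
    ordered = sorted(values)
    rank = (len(ordered) - 1) * pct / 100.0
    lower = math.floor(rank)
    upper = math.ceil(rank)
    weight = rank - lower
    return ordered[lower] * (1 - weight) + ordered[upper] * weight


def summarize_attempts(attempts_by_uss):
    """Map each USS to the min, max, average, and 95th percentile of its attempts."""
    return {
        uss: {
            "min": min(attempts),
            "max": max(attempts),
            "average": mean(attempts),
            "p95": percentile(attempts, 95),
        }
        for uss, attempts in attempts_by_uss.items()
    }


# Hypothetical attempt counts per accepted operation, for illustration only.
example = {"USS-A": [1, 1, 1, 2], "USS-B": [1, 1, 3, 1, 1]}
for uss, stats in summarize_attempts(example).items():
    print(uss, stats)
```

With small samples, the 95th percentile is sensitive to the interpolation method chosen, so results from different tools may not match exactly.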

4.2.1 Analysis

4.2.1.1 NYUASTS

For flight activities, on average, UAS operators were able to achieve an accepted operation on

their initial attempt at planning (COMP-02), as shown in Table 8. This signifies that the USSs

were successful in supplying the UAS operators with enough situational awareness information

during the planning phase to effectively plan around existing operational intents and constraints.

Table 8: NYUASTS Attempts for Accepted Operation

| USS | Min | Max | Average |
|---|---|---|---|
| ANRA | 1 | 2 | 1 |
| AX | 1 | 3 | 2 |
| CAL | 1 | 2 | 1 |
| OneSky | 1 | 2 | 1 |

There are situations that may cause operations to be replanned after they are accepted. For UFT,

the two main causes of replans were constraints or higher priority operations (COMP-03), which

could occur both pre-flight and in-flight (COMP-05) and can be categorized by operating area

(COMP-04). These are highlighted by the metrics shown in Figure 12.

Figure 12: NYUASTS Replans by Cause and Stage
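A breakdown of replans by cause and stage (COMP-03 and COMP-05) amounts to counting records and converting the counts to percentages. The sketch below assumes a hypothetical record format of `(cause, stage)` tuples; the sample records are illustrative and are not UFT data.

```python
from collections import Counter


def replan_breakdown(replans):
    """Return {(cause, stage): (count, percentage)} for a list of replan records."""
    counts = Counter(replans)
    total = sum(counts.values())
    return {key: (n, 100.0 * n / total) for key, n in counts.items()}


# Hypothetical replan records, for illustration only.
records = [
    ("constraint", "pre-flight"),
    ("constraint", "in-flight"),
    ("priority operation", "pre-flight"),
    ("constraint", "pre-flight"),
]
for (cause, stage), (count, pct) in replan_breakdown(records).items():
    print(f"{cause:20s} {stage:10s} {count:3d} {pct:5.1f}%")
```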

4.2.1.2 MAAP

For flight activities, on average, UAS operators were able to achieve an accepted operation on the

first attempt at planning (COMP-02), as shown in Table 9. This was aided by the approach that

allowed operators/RPICs to see all other operations in the USSs’ user interfaces.

Table 9: MAAP Attempts for Accepted Operation

| USS | Min | Max | Average | 95th Percentile |
|---|---|---|---|---|
| ANRA | 1 | 6 | 1 | 1.75 |
| Collins | 1 | 1 | 1 | 1.00 |
| OneSky | 1 | 2 | 1 | 1.00 |

For UFT, the two main causes of replans were constraints or higher priority operations (COMP-03),

which could occur both pre-flight and in-flight (COMP-05) and are highlighted in Figure 13.

In total, there were 10 replans at MAAP; 9 of them fell within the 0.2-square-mile and

0.4-square-mile operating areas (COMP-04), as highlighted in the heatmap in Figure 14.

Figure 13: MAAP Operational Intent Replans

Figure 14: MAAP Operational Intent Replan Heatmap

4.2.2 Observations

Table 10 contains strategic deconfliction related observations compiled from the test sites and UFT

participants.

Table 10: Strategic Deconfliction Observations

| Area | Observations |
|---|---|
| Planning Attempts | • The level of information provided to the operators (e.g., showing all existing operational intents to the operator) allowed most operations to be accepted on the first attempt. • While the information sharing was successful, UFT identified an opportunity to increase resilience in the UTM data exchanges and improve the presentation of information to the operators. |
| Automation | • UFT identified potential limitations to manual deconfliction by operators when the operational complexity continues to increase or deconfliction becomes more complicated. • Automated solutions could reduce the burden on the pilot and add efficiency to the airspace. Any automated solution should balance the need for safety, operational efficiency, and privacy of users. • Feedback from participants showed that a means of negotiation between USSs is important as operational complexity increases. • Further USS and Ground Control Station (GCS) integration could be beneficial for improving operator awareness during operations. |
| In-Flight Replans | • Some USSs supported full in-flight replanning and avoided the need to land the UA before they were able to replan. • Support for in-flight replans could be beneficial as USS software continues to mature. |
| COPs | • The addition of COPs and best practices for reasonable time to deconflict, volume buffers, common resolution approaches would benefit the consistency and efficiency of strategic conflict resolution. |

4.3 Priority Operations

Strategic conflict detection, per the ASTM USS Interoperability Standard, assumes certain

regulations are established by the regulator in relation to operation priority. These regulations

include the identification of priorities of operations and whether conflicts/overlaps are allowed

within the same priority level. For traditional aviation, the FAA has existing rules in place, which

dictate when and where a certain flight may have priority over another. For the UTM environment,

the ASTM standard includes the concept of prioritization for small UAS operations, signified by

a priority integer in the operational intent without a specific structure or scheme. UFT explored

the technical approach to exchange prioritization data based on capabilities identified in the ASTM

standard. Any concepts implemented by UFT in this area should be viewed strictly from a research

perspective and not misinterpreted as any regulatory or policy decision having been made by the

FAA. For UFT, conflicts/overlaps were not allowed within the same priority level; the first-planned

operation was given priority over subsequent operations.

The standard puts the prioritization scheme, priority levels, and attributes that characterize them

at the discretion of the regulator. Nonetheless, a lower priority operation must be planned not to

conflict with a higher priority operation [2]. At the time of UFT, the FAA had not determined a

formal prioritization scheme, so a generic numbering scheme was used. The generic priority

structure used integer numbers (e.g., from 0 to 40 in increments of 10). A higher integer

indicates a higher priority. The scheme in UFT was solely intended to test the concept

and technology and should not be interpreted as any type of decision from the agency on this topic.
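The priority rules described for UFT (a higher integer means higher priority, no overlaps within the same priority level, and the first-planned operation keeps priority) can be sketched as a simple decision function. This is an illustration of the research scheme described in this section only; the `Intent` type and `evaluate_new_intent` function are hypothetical names and do not come from the ASTM standard or any USS implementation.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Intent:
    """Hypothetical operational intent reduced to an ID and a priority integer."""
    intent_id: str
    priority: int  # higher integer = higher priority (e.g., 0, 10, 20, 30, 40)


def evaluate_new_intent(new: Intent, conflicting: list[Intent]):
    """Decide whether a new intent can be accepted against already-planned intents
    that it overlaps.

    Returns (accepted, intents_to_replan).
    """
    # An existing intent with equal or higher priority blocks the new one:
    # equal priority may not overlap, and the first-planned operation wins.
    blockers = [i for i in conflicting if i.priority >= new.priority]
    if blockers:
        return False, []
    # Otherwise every conflicting intent has lower priority and must replan.
    return True, list(conflicting)


# Illustrative values only.
existing = [Intent("op-1", 10), Intent("op-2", 0)]
print(evaluate_new_intent(Intent("op-3", 20), existing))  # accepted; both must replan
print(evaluate_new_intent(Intent("op-4", 10), existing))  # rejected by op-1
```

This mirrors the test approach described for the field activities, in which lower priority operations were submitted first and then displaced by higher priority ones.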

Table 11 highlights the key data collection metrics to assess priority operations and supporting

services/technologies.

Table 11: Priority Operations Metrics

| Metric ID | Metric Title | Description | Supported MOE |
|---|---|---|---|
| PC-01 | Elevated priority conflicts detected | • Percentage/number of elevated priority operations causing conflicts. • Number of elevated priority operations planned vs. number of conflicts detected. | UFT-MOE-3 |
| PC-02 | Replan time due to higher priority operation conflict | • How long does it take for an operator/RPIC to replan its operation (accepted or later state) due to a priority operation conflict? • Categorize pre-flight vs. in-flight (min, max, average, 95th percentile). | UFT-MOE-3 |
| PC-03 | Replan attempts due to higher priority operation conflict | • How many attempts does it take for an operator to successfully replan due to a higher priority operation conflict? | UFT-MOE-3 |

4.3.1 Analysis

4.3.1.1 NYUASTS

During UFT activities at NYUASTS, priority operations were tested with all four USSs: ANRA,

AX Enterprize, CAL Analytics, and OneSky. Priority operations were tested by submitting lower

priority operations into the UTM ecosystem first, then submitting higher priority operations, which

required lower priority operations to be replanned. 101 elevated priority operations were filed

across the four USSs. 98 operations were impacted by the elevated priority operations. Table 12

shows the number of elevated priority operations created by the USSs and the number of conflicts

that were detected because of the elevated priority operations (PC-01).

Table 12: Priority Ops Created vs. Conflicts Detected

| USS | # Priority Ops | # of Conflicts Detected |
|---|---|---|
| ANRA | 27 | 23 |
| AX Enterprize | 44 | 38 |
| CAL Analytics | 10 | 10 |
| OneSky | 20 | 27 |
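As a quick arithmetic check, the Table 12 values can be totaled and compared with the figures given in the text (101 elevated priority operations filed and 98 operations impacted). The sketch below uses only the Table 12 values; the conflicts-per-operation ratio is an added illustrative calculation, not a metric defined in this report.

```python
# Table 12 values: USS -> (priority ops created, conflicts detected).
table_12 = {
    "ANRA": (27, 23),
    "AX Enterprize": (44, 38),
    "CAL Analytics": (10, 10),
    "OneSky": (20, 27),
}

total_priority_ops = sum(ops for ops, _ in table_12.values())
total_conflicts = sum(conflicts for _, conflicts in table_12.values())

# Illustrative ratio: conflicts detected per elevated priority operation.
conflicts_per_op = {
    uss: conflicts / ops for uss, (ops, conflicts) in table_12.items()
}

print(total_priority_ops)  # 101
print(total_conflicts)     # 98
for uss, ratio in conflicts_per_op.items():
    print(f"{uss}: {ratio:.2f}")
```

A ratio above 1, as for OneSky, presumably indicates that a single elevated priority operation conflicted with more than one existing operation.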

Several replans were done due to elevated priority operations. The replans occurred both pre-flight

and in-flight with CAL Analytics and AX Enterprize supporting the in-flight replans. All replans

were accepted on the first attempt (PC-03). Time to replan metrics (PC-02) are provided in Table

13. Challenges with data collection and the human factors associated with planning resulted in a

wide range of values for the time it took to replan operational intents. These times should not be

interpreted as the typical amount of time for a UTM system to replan.

Table 13: Replan Time Due to Higher Priority Operation

| Statistic | Time to Replan (seconds) |
|---|---|
| Min | 9.44 |
| Max | 410 |
| Average | 74.07 |
| 95th Percentile | 168.75 |

4.3.1.2 MAAP

For MAAP, 16 elevated priority operations were filed via the OneSky and Collins USSs. 30

operations were impacted by elevated priority operations. 70% of the elevated priority operations

conflicted with other operations. Table 14 shows the number of elevated priority operations created

by the two USSs and the number of conflicts that were detected because of the elevated priority

operations (PC-01).

Table 14: Priority Ops Created vs. Conflicts Detected

| USS | # Elevated Priority Ops | # of Conflicts Detected |
|---|---|---|
| Collins | 12 | 24 |
| OneSky | 4 | 6 |

Seven replans were done due to elevated priority operations. Two of the seven occurred in-flight.

Due to limitations in some USSs’ software, the in-flight replan required the operator to land the

aircraft before replanning, which greatly increased the total replan time. All replans were accepted