
UNIT – I – Data communication and Computer networks – SITA1401

SCHOOL OF COMPUTING

DEPARTMENT OF INFORMATION TECHNOLOGY


DATA COMMUNICATION

Introduction to data communication - Network protocols & standards - Line configuration - Topology - Transmission mode - Categories of networks - OSI model - Layers of OSI model - TCP/IP Model - Transmission media - Guided media - Unguided media - Switching - Circuit switching - Packet switching.

1.1 INTRODUCTION

A network is a set of devices (often referred to as nodes) connected by

communication links. A node can be a computer, printer, or any other device capable

of sending and/or receiving data generated by other nodes on the network.

The term "computer network" means a collection of autonomous computers

interconnected by a single technology. Two computers are said to be interconnected if

they are able to exchange information.

The connection need not be via a copper wire; fiber optics, microwaves, infrared, and

communication satellites can also be used.

Networks come in many sizes, shapes and forms, as we will see later. They are

usually connected together to make larger networks, with the Internet being the most well-

known example of a network of networks.

There is considerable confusion in the literature between a computer network and a

distributed system. The key distinction is that in a distributed system, a collection of

independent computers appears to its users as a single coherent system. Usually, it has a

single model or paradigm that it presents to the users. Often a layer of software on top of

the operating system, called middleware, is responsible for implementing this model. A

well-known example of a distributed system is the World Wide Web. It runs on top of

the Internet and presents a model in which everything looks like a document (Web page).

The effectiveness of a data communications system depends on four fundamental characteristics: delivery, accuracy, timeliness, and jitter.

1. Delivery. The system must deliver data to the correct destination. Data must be received

by the intended device or user and only by that device or user.

2. Accuracy. The system must deliver the data accurately. Data that have been altered in

transmission and left uncorrected are unusable.

3. Timeliness. The system must deliver data in a timely manner. Data delivered

late are useless. In the case of video and audio, timely delivery means delivering

data as they are produced, in the same order that they are produced, and without

significant delay. This kind of delivery is called real-time transmission.

4. Jitter. Jitter refers to the variation in the packet arrival time. It is the uneven delay in the

delivery of audio or video packets. For example, let us assume that video packets are sent

every 30 ms. If some of the packets arrive with 30-ms delay and others with 40-ms delay,

an uneven quality in the video is the result.
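The jitter example above can be put into numbers. The following is a minimal sketch, assuming we already have a list of per-packet delays in milliseconds; the function name `jitter_stats` and the sample trace are hypothetical, and the two measures shown are only simple ways to express "uneven delay", not a standard definition.

```python
def jitter_stats(delays_ms):
    """Summarise how uneven a list of per-packet delays (in ms) is."""
    if len(delays_ms) < 2:
        raise ValueError("need at least two packets to measure jitter")
    # Change in delay between each pair of consecutive packets.
    diffs = [abs(b - a) for a, b in zip(delays_ms, delays_ms[1:])]
    return {
        "peak_to_peak_ms": max(delays_ms) - min(delays_ms),
        "mean_consecutive_diff_ms": sum(diffs) / len(diffs),
    }


# Hypothetical trace: some packets arrive with 30 ms delay, others with 40 ms.
delays = [30, 40, 30, 30, 40, 40]
print(jitter_stats(delays))
```

If every packet had the same delay, both values would be zero and the video would play smoothly, even if that constant delay were large.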

A data communications system has five components:

1. Message: The message is the information (data) to be communicated. Popular forms of information include text, numbers, pictures, audio, and video.

2. Sender: The sender is the device that sends the data message. It can be a computer, workstation, telephone handset, video camera, and so on.

3. Receiver: The receiver is the device that receives the message. It can be a computer, workstation, telephone handset, television, and so on.

4. Transmission medium: The transmission medium is the physical path by which a message travels from sender to receiver. Some examples of transmission media include twisted-pair wire, coaxial cable, fiber-optic cable, and radio waves.

5. Protocol: A protocol is a set of rules that govern data communications. It represents an agreement between the communicating devices. Without a protocol, two devices may be connected but not communicating, just as a person speaking French cannot be understood by a person who speaks only Japanese.

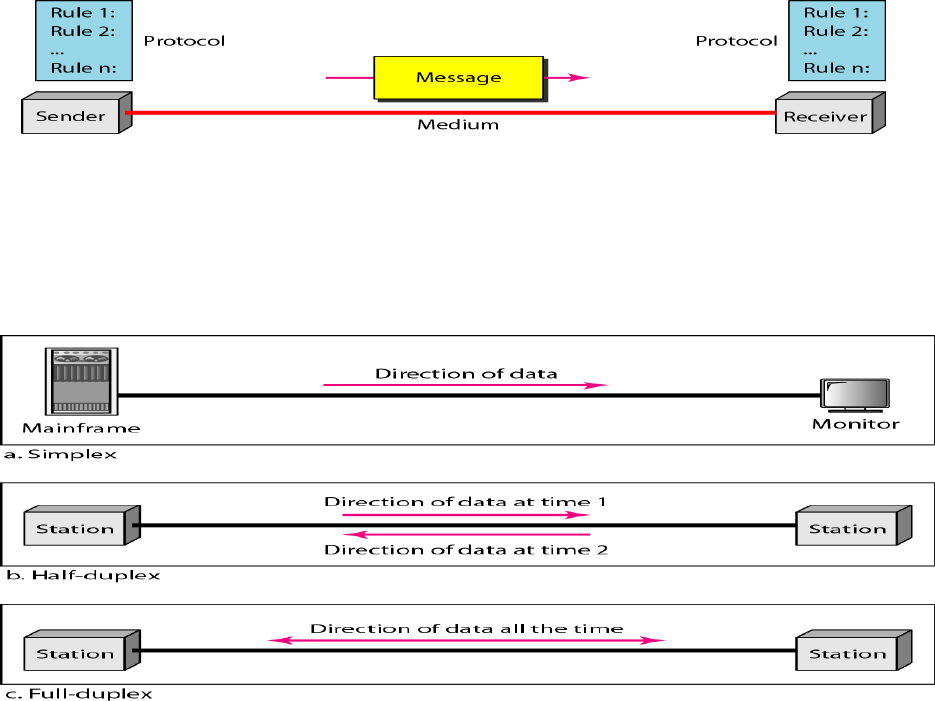

Figure 1.1 Components of Data Communications

Data Flow

Communication between two devices can be simplex, half-duplex, or full-duplex, as shown in Figure 1.2.

Figure 1.2 Data Flow Direction

Simplex

In simplex mode, the communication is unidirectional, as on a one-way street. Only one of the two devices on a link can transmit; the other can only receive (Figure 1.2a). Keyboards and traditional monitors are examples of simplex devices.


Half-Duplex

In half-duplex mode, each station can both transmit and receive, but not at the same time. When one device is sending, the other can only receive, and vice versa (Figure 1.2b). Walkie-talkies and CB (citizens band) radios are both half-duplex systems.

Full-Duplex

In full-duplex mode, both stations can transmit and receive simultaneously (Figure 1.2c). One

common example of full-duplex communication is the telephone network. When two

people are communicating by a telephone line, both can talk and listen at the same time.

The full-duplex mode is used when communication in both directions is required all the

time.

Network Criteria

A network must be able to meet a certain number of criteria. The most important of these

are performance, reliability, and security.

Performance

Performance can be measured in many ways, including transit time and response time. Transit

time is the amount of time required for a message to travel from one device to another. Response

time is the elapsed time between an inquiry and a response. The performance of a network

depends on a number of factors, including the number of users, the type of transmission medium,

the capabilities of the connected hardware, and the efficiency of the software.

Performance is often evaluated by two networking metrics: throughput and delay. We often need more throughput and less delay. However, these two criteria are often contradictory. If we try to send more data to the network, we may increase throughput, but we also increase the delay because of traffic congestion in the network.

Reliability: In addition to accuracy of delivery, network reliability is measured by the frequency

of failure, the time it takes a link to recover from a failure, and the network's robustness in a

catastrophe.

Security: Network security issues include protecting data from unauthorized access, protecting

data from damage and development, and implementing policies and procedures for recovery from

breaches and data losses.

Type of Connection

A network is two or more devices connected through links. A link is a

communications pathway that transfers data from one device to another.

There are two possible types of connections: point-to-point and multipoint.

Point-to-Point

A point-to-point connection provides a dedicated link between two devices. The entire

capacity of the link is reserved for transmission between those two devices. Most point-to-

point connections use an actual length of wire or cable to connect the two ends, but other

options, such as microwave or satellite links, are also possible.

When you change television channels by infrared remote control, you are establishing a

point-to-point connection between the remote control and the television's control system.

Multipoint

A multipoint (also called multi-drop) connection is one in which more than two specific

devices share a single link.

In a multipoint environment, the capacity of the channel is shared, either spatially

or temporally. If several devices can use the link simultaneously, it is a spatially

shared connection. If users must take turns, it is a timeshared connection.

Figure 1.3 Types of Connection

Physical Topology

The term physical topology refers to the way in which a network is laid out

physically.

Two or more devices connect to a link; two or more links form a topology. The topology

of a network is the geometric representation of the relationship of all the links and linking

devices (usually called nodes) to one another.

There are four basic topologies possible: mesh, star, bus, and ring.

Figure 1.4 Types of topologies

MESH:

A mesh topology is the one where every node is connected to every other node in the

network.

Figure 1.5 Mesh Topology

A mesh topology can be a full mesh topology or a partially connected mesh

topology.

In a full mesh topology, every computer in the network has a connection to each of the

other computers in that network. The number of connections in this network can be calculated using the following formula (n is the number of computers in the network):

n(n-1)/2
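To see how quickly the link count grows, the formula can be evaluated for a few network sizes. This is a small illustrative sketch; the function names `full_mesh_links` and `ports_per_node` are chosen here for the example and do not come from the notes.

```python
def full_mesh_links(n):
    """Number of point-to-point links in a full mesh of n nodes: n(n-1)/2."""
    if n < 0:
        raise ValueError("number of nodes cannot be negative")
    return n * (n - 1) // 2


def ports_per_node(n):
    """Each node needs one port for every other node in a full mesh."""
    return max(n - 1, 0)


for n in (2, 5, 10, 50):
    print(f"{n} nodes: {full_mesh_links(n)} links, {ports_per_node(n)} ports per node")
```

Going from 10 to 50 computers raises the link count from 45 to 1225, which is why full mesh is usually reserved for small networks or backbones.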

In a partially connected mesh topology, at least two of the computers in the

network have connections to multiple other computers in that network. It is an

inexpensive way to implement redundancy in a network. In the event that one of the

primary computers or connections in the network fails, the rest of the network

continues to operate normally.

Advantages of a mesh topology

Can handle high amounts of traffic, because multiple devices can transmit data

simultaneously.

A failure of one device does not cause a break in the network or transmission of

data.

Adding additional devices does not disrupt data transmission between other

devices.

Disadvantages of a mesh topology

The cost to implement is higher than other network topologies, making it a less

desirable option.

Building and maintaining the topology is difficult and time consuming.

The chance of redundant connections is high, which adds to the high costs and

potential for reduced efficiency.


STAR:

Figure 1.6 Star Topology

A star topology (also called a star network) is one of the most common network setups. In this configuration, every node connects to a central network device, like a hub, switch, or computer. The central network device acts as a server and the peripheral devices act as clients. Depending on the type of network card used in each computer of the star topology, a coaxial cable or an RJ-45 network cable is used to connect the computers together.

Advantages of star topology

Centralized management of the network, through the use of the central computer,

hub, or switch.

Easy to add another computer to the network.

If one computer on the network fails, the rest of the network continues to function

normally.

The star topology is used in local-area networks (LANs). High-speed LANs often use a star topology with a central hub.

Disadvantages of star topology

Can have a higher cost to implement, especially when using a switch or router as

the central network device.

The central network device determines the performance and number of nodes the

network can handle.

If the central computer, hub, or switch fails, the entire network goes down and all

computers are disconnected from the network.

BUS:

Figure 1.7 Bus Topology

A bus topology (also called a line topology) is a network setup in which each computer and network device is connected to a single cable or backbone.

Advantages of bus topology

1. It works well when you have a small network.

2. It's the easiest network topology for connecting computers or peripherals in a linear fashion.

3. It requires less cable length than a star topology.

Disadvantages of bus topology

1. It can be difficult to identify the problems if the whole network goes down.

2. It can be hard to troubleshoot individual device issues.

3. Bus topology is not great for large networks.

4. Terminators are required for both ends of the main cable.

5. Additional devices slow the network down.

6. If a main cable is damaged, the network fails or splits into two.

RING:

Figure 1.8 Ring Topology

Ring topology is a network configuration in which device connections create a circular

data path. In a ring network, packets of data travel from one device to the next until they

reach their destination. Most ring topologies allow packets to travel only in one direction, called a unidirectional ring network. Others permit data to move in either direction, called bidirectional.

The major disadvantage of a ring topology is that if any individual connection in the ring

is broken, the entire network is affected.

Ring topologies may be used in either local area networks (LANs) or wide area

networks (WANs).

Advantages of ring topology

1. All data flows in one direction, reducing the chance of packet collisions.

2. A network server is not needed to control network connectivity between each workstation.

3. Data can transfer between workstations at high speeds.

4. Additional workstations can be added without impacting performance of the network.

Disadvantages of ring topology

1. All data being transferred over the network must pass through each workstation on the network, which can make it slower than a star topology.

2. The entire network will be impacted if one workstation shuts down.

3. The hardware needed to connect each workstation to the network is more expensive than Ethernet cards and hubs/switches.

Hybrid Topology

A network can be hybrid. For example, we can have a main star topology with each

branch connecting several stations in a bus topology, as shown in Figure 1.9.

Figure 1.9 Hybrid Topology

Types of Network based on size

Networks are classified by their size, the area they cover, and their physical architecture. The primary network categories are PAN, LAN, MAN, and WAN. The categories differ in characteristics such as distance, transmission speed, cabling, and cost.

Figure 1.10 Types of Network

❖ PAN covers a very small area: 0 to 10 m

❖ LAN covers a small area: up to 1 km (max)

❖ MAN covers an area larger than a LAN: up to 50 km (city)

❖ WAN covers the largest area of all: more than 50 km
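The distance ranges above can be read as a simple decision rule. The sketch below is only an illustration of that rule; the function name `classify_network` is hypothetical, and the thresholds are the approximate figures from the list above, not hard limits defined by any standard.

```python
def classify_network(span_m):
    """Map a coverage distance in metres to PAN, LAN, MAN or WAN.

    Thresholds follow the approximate ranges given in these notes.
    """
    if span_m < 0:
        raise ValueError("distance cannot be negative")
    if span_m <= 10:
        return "PAN"
    if span_m <= 1_000:
        return "LAN"
    if span_m <= 50_000:
        return "MAN"
    return "WAN"


for span in (5, 300, 20_000, 800_000):
    print(f"{span} m -> {classify_network(span)}")
```

In practice the category also depends on ownership and technology, so distance alone is only a first approximation.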

PAN (Personal Area Network)

➢ A PAN is a computer network formed around a person.

➢ It generally consists of a computer, mobile phone, or personal digital assistant.

➢ A PAN can be used for communication among these personal devices and for connecting them to a digital network and the internet.

Characteristics of PAN

➢ It is mostly a network of personal devices within a limited area.

➢ It handles the interconnection of IT devices in the surroundings of a single user.

➢ A PAN includes mobile devices, tablets, and laptops.

➢ A PAN that is connected wirelessly is called a WPAN.

➢ Devices used in a PAN: cordless mice, keyboards, and Bluetooth systems.

Advantages of PAN

Here are the important benefits of using a PAN:

➢ PAN networks are relatively secure and safe.

➢ It offers a short-range solution, up to ten meters.

➢ It is strictly restricted to a small area.

Disadvantages of PAN

Here are the important drawbacks of using a PAN:

➢ It may establish a bad connection to other networks on the same radio bands.

➢ Distance limits.

LAN (Local Area Network)

Figure 1.11 LAN


➢ A Local Area Network (LAN) is a group of computers and peripheral devices that are connected in a limited area such as a school, laboratory, home, or office building.

➢ It is a widely used network for sharing resources like files, printers, games, and other applications.

➢ The simplest type of LAN connects computers and a printer in someone's home or office.

➢ In general, a LAN uses only one type of transmission medium.

➢ It is a network of fewer than 5000 interconnected devices across several buildings.

Characteristics of LAN

Here are the important characteristics of a LAN:

➢ It is a private network, so an outside regulatory body never controls it.

➢ A LAN operates at a relatively higher speed than WAN systems.

➢ There are various kinds of media access control methods, like token ring and Ethernet.

Advantages of LAN

Here are the benefits of using a LAN:

➢ Computer resources like hard disks, DVD-ROM drives, and printers can be shared over a local area network. This significantly reduces the cost of hardware purchases.

➢ You can use the same software over the network instead of purchasing licensed software for each client in the network.

➢ Data of all network users can be stored on a single hard disk of the server computer.

➢ You can easily transfer data and messages over networked computers.

➢ It is easy to manage data in only one place, which makes data more secure.

➢ A Local Area Network offers the facility to share a single internet connection among all the LAN users.

Disadvantages of LAN

Here are the important drawbacks of a LAN:

➢ A LAN does save cost because of shared computer resources, but the initial cost of installing a Local Area Network is quite high.

➢ The LAN admin can check the personal data files of every LAN user, so it does not offer good privacy.

➢ Unauthorized users can access critical data of an organization if the LAN admin is not able to secure the centralized data repository.

➢ A Local Area Network requires constant LAN administration, as there are issues related to software setup and hardware failures.

MAN (Metropolitan Area Network)

➢ A Metropolitan Area Network (MAN) is a computer network that spans an entire city, a college campus, or a small region.

➢ This type of network is larger than a LAN, which is mostly limited to a single building or site. Depending upon the type of configuration, this type of network allows you to cover an area from several miles to tens of miles.

Figure 1.11 MAN

Characteristics of MAN

➢ It mostly covers towns and cities within a maximum range of 50 km.

➢ The most commonly used media are optical fibers and cables.

➢ Its data rates are adequate for distributed computing applications.

Advantages of MAN

➢ It offers fast communication using high-speed carriers, like fiber-optic cables.

➢ It provides excellent support for an extensive network and greater access to WANs.

➢ The dual bus in a MAN supports transmitting data in both directions concurrently.

➢ A MAN mostly includes some areas of a city or an entire city.

Disadvantages of MAN

Here are the drawbacks of using a MAN:

➢ You need more cable to establish a MAN connection from one place to another.

➢ In a MAN it is tough to make the system secure from hackers.

WAN (Wide Area Network)

A WAN can be formed by connecting a LAN to other LANs using telephone lines and radio waves. It is mostly limited to an enterprise or an organization.

Figure 1.12 WAN

Advantages of WAN

Here are the benefits of using a WAN:

➢ A WAN helps you to cover a larger geographical area. Therefore, business offices situated at longer distances can easily communicate.

➢ It contains devices like mobile phones, laptops, tablets, computers, gaming consoles, etc.

➢ WLAN connections work using radio transmitters and receivers built into client devices.

Disadvantages of WAN

Here are the drawbacks of using a WAN:

➢ The initial setup cost is very high.

➢ It is difficult to maintain a WAN. You need skilled technicians and network administrators.

➢ There are more errors and issues because of the wide coverage and the use of different technologies.

➢ It requires more time to resolve issues because of the involvement of multiple wired and wireless technologies.

➢ It offers lower security compared to other types of networks.

Transmission Medium

➢ A transmission medium is a physical path between the transmitter and the receiver.

➢ That is, it is the channel through which data is sent from one place to another.

Figure 1.13 Transmission Medium

➢ Transmission media are broadly classified into the following types:

Figure 1.14 Types of Transmission Medium

Guided Media: Guided media, which are those that provide a conduit from one device to another, include twisted-pair cable, coaxial cable, and fiber-optic cable. They are also referred to as wired or bounded transmission media. Signals being transmitted are directed along a narrow pathway by physical links.

Features:

High Speed

Secure

Used for comparatively shorter distances

There are three major types of guided media: twisted-pair cable, coaxial cable, and optical fibre.

Twisted-pair and coaxial cable use metallic (copper) conductors that accept and transport

signals in the form of electric current.

Optical fibre is a cable that accepts and transports signals in the form of light.

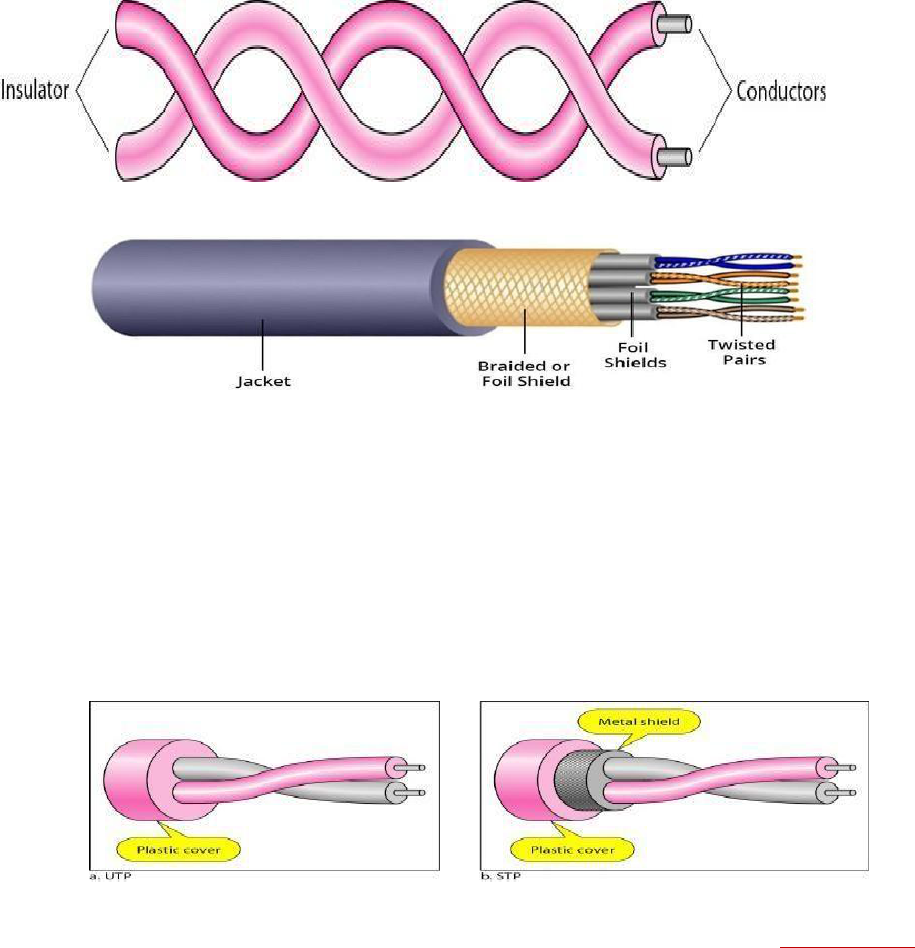

Twisted-Pair Cable: A twisted pair consists of two conductors (normally copper),

each with its own plastic insulation, twisted together. One of the wires is used to carry

signals to the receiver, and the other is used only as a ground reference.

Figure 1.15 Twisted-Pair Cable

Unshielded Versus Shielded Twisted-Pair Cable

The most common twisted-pair cable used in communications is referred to as unshielded twisted-pair (UTP). Shielded twisted-pair (STP) cable has a metal foil or braided-mesh covering that encases each pair of insulated conductors. Although the metal casing improves the quality of the cable by preventing the penetration of noise or crosstalk, it makes the cable bulkier and more expensive.

Figure 1.16 Unshielded Twisted-Pair (UTP) and Shielded Twisted-Pair (STP)

The most common UTP connector is RJ45 (RJ stands for registered jack).

Applications

Twisted-pair cables are used in telephone lines to provide voice and data channels. Local-area networks, such as 10Base-T and 100Base-T, also use twisted-pair cables.

Coaxial Cable

Coaxial cable (or coax) carries signals of higher frequency ranges than those in twisted-pair cable. Coax has a central core conductor of solid or stranded wire (usually copper) enclosed in an insulating sheath, which is, in turn, encased in an outer conductor of metal foil, braid, or a combination of the two. The outer metallic wrapping serves both as a shield against noise and as the second conductor, which completes the circuit. This outer conductor is also enclosed in an insulating sheath, and the whole cable is protected by a plastic cover.

Figure 1.17 Coaxial cable

The most common type of connector used today is the Bayonet Neill-Concelman (BNC) connector.

Applications

Coaxial cable was widely used in analog telephone networks and, later, in digital telephone networks.

Cable TV networks also use coaxial cables.

Another common application of coaxial cable is in traditional Ethernet LANs.

Fiber-Optic Cable

A fiber-optic cable is made of glass or plastic and transmits signals in the form of

light. Light travels in a straight line as long as it is moving through a single uniform

substance.

If a ray of light traveling through one substance suddenly enters another substance (of a different density), the ray changes direction.

Figure 1.18 Bending of light ray

Optical fibers use reflection to guide light through a channel. A glass or plastic core is

surrounded by a cladding of less dense glass or plastic.
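The reflection that keeps light inside the core only happens when a ray strikes the core-cladding boundary at a shallow enough angle. As a rough sketch, the limiting (critical) angle can be computed from the refractive indices of the two materials. The index values 1.48 and 1.46 below are assumed, typical-looking numbers chosen for illustration and are not taken from these notes; the function name is likewise hypothetical.

```python
import math


def critical_angle_deg(n_core, n_cladding):
    """Critical angle, measured from the normal to the core-cladding boundary.

    Rays hitting the boundary at a larger angle than this are reflected
    back into the core instead of escaping into the cladding.
    """
    if n_cladding >= n_core:
        raise ValueError("cladding must be optically less dense than the core")
    return math.degrees(math.asin(n_cladding / n_core))


# Assumed refractive indices, for illustration only.
angle = critical_angle_deg(n_core=1.48, n_cladding=1.46)
print(f"critical angle: {angle:.1f} degrees")
```

The closer the two indices are, the closer the critical angle is to 90 degrees, so only rays travelling almost parallel to the fiber axis stay in the core.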

Figure 1.19 Optical fibers

Propagation Modes

Figure 1.20 Types of Modes

Multimode is so named because multiple beams from a light source move through the

core in different paths. How these beams move within the cable depends on the structure

of the core, as shown in Figure 1.21.

Figure 1.21 Types of Modes

In multimode step-index fiber, the density of the core remains constant from the center

to the edges. A beam of light moves through this constant density in a straight line

until it reaches the interface of the core and the cladding. The term step index refers to

the suddenness of this change, which contributes to the distortion of the signal as it

passes through the fiber.


A second type of fiber, called multimode graded-index fiber, decreases this distortion

of the signal through the cable. The word index here refers to the index of refraction.

Single-Mode: Single-mode uses step-index fiber and a highly focused source of light that

limits beams to a small range of angles, all close to the horizontal.

Three types of connectors are used with fiber-optic cable: the subscriber channel (SC) connector, the straight-tip (ST) connector, and the MT-RJ (mechanical transfer registered jack) connector.

Applications

Fiber-optic cable is often found in backbone networks because its wide bandwidth is cost-effective.

Some cable TV companies use a combination of optical fiber and coaxial cable, thus

creating a hybrid network.

Local-area networks such as 100Base-FX (Fast Ethernet) and 1000Base-X also use fiber-optic cable.

Advantages and Disadvantages of Optical Fiber

Advantages: Fiber-optic cable has several advantages over metallic cable (twisted pair or coaxial).

Higher bandwidth

Less signal attenuation

Immunity to electromagnetic interference

Resistance to corrosive materials

Light weight

Greater immunity to tapping

Disadvantages of Fibre Optic Cable

Installation and maintenance

Unidirectional light propagation

High Cost

UNGUIDED MEDIA: WIRELESS

Unguided media transport electromagnetic waves without using a physical conductor.

This type of communication is often referred to as wireless communication.

Figure 1.22 Unguided media

Unguided signals can travel from the source to the destination in several ways: ground propagation, sky propagation, and line-of-sight propagation, as shown in Figure 1.23.

Figure 1.23 Types of Unguided media

Radio Waves

Electromagnetic waves ranging in frequencies between 3 kHz and 1 GHz are normally called radio waves. Radio waves are omnidirectional. When an antenna transmits radio waves, they are propagated in all directions. This means that the sending and receiving antennas do not have to be aligned. A sending antenna sends waves that can be received by any receiving antenna. The omnidirectional property has a disadvantage, too. The radio waves transmitted by one antenna are susceptible to interference by another antenna that may send signals using the same frequency or band.

Omnidirectional Antenna

Radio waves use omnidirectional antennas that send out signals in all directions. Based on the wavelength, strength, and the purpose of transmission, we can have several types of antennas. Figure 1.24 shows an omnidirectional antenna.

Figure 1.24 Omnidirectional Antenna

Applications

The omnidirectional characteristics of radio waves make them useful for multicasting, in which there is one sender but many receivers. AM and FM radio, television, maritime radio, cordless phones, and paging are examples of multicasting.

Microwaves

Electromagnetic waves having frequencies between 1 and 300 GHz are called microwaves. Microwaves are unidirectional. The sending and receiving antennas need to be aligned. The unidirectional property has an obvious advantage: a pair of antennas can be aligned without interfering with another pair of aligned antennas.

Unidirectional Antenna

Microwaves need unidirectional antennas that send out signals in one direction. Two types of antennas are used for microwave communications: the parabolic dish and the horn.

Figure 1.25 Unidirectional Antenna

Applications:

Microwaves are used for unicast communication such as cellular telephones, satellite networks, and wireless LANs.

Infrared

Infrared waves, with frequencies from 300 GHz to 400 THz (wavelengths from 1 mm to 770 nm), can be used for short-range communication. Infrared waves, having high frequencies, cannot penetrate walls. This advantageous characteristic prevents interference between one system and another; a short-range communication system in one room cannot be affected by another system in the next room. When we use our infrared remote control, we do not interfere with the use of the remote by our neighbors. However, this same characteristic makes infrared signals useless for long-range communication. In addition, we cannot use infrared waves outside a building because the sun's rays contain infrared waves that can interfere with the communication.

Applications:

Infrared signals can be used for short-range communication in a closed area using

line-of-sight propagation.
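The three frequency ranges given in this section (radio waves, microwaves, infrared) can be summarised as a lookup, together with the standard relation wavelength = c / frequency. This is a minimal sketch; the function names are hypothetical, and the boundaries are the ones quoted in the text above, which are conventions rather than sharp physical limits.

```python
C = 299_792_458  # speed of light in a vacuum, in metres per second


def classify_band(freq_hz):
    """Name the band a frequency falls into, using the ranges from this section."""
    if 3e3 <= freq_hz < 1e9:
        return "radio wave"
    if 1e9 <= freq_hz < 300e9:
        return "microwave"
    if 300e9 <= freq_hz <= 400e12:
        return "infrared"
    return "outside the ranges covered here"


def wavelength_m(freq_hz):
    """Wavelength in metres for a given frequency in hertz."""
    return C / freq_hz


for f in (100e6, 2.4e9, 350e12):
    print(f"{f:.3g} Hz: {classify_band(f)}, wavelength {wavelength_m(f):.3g} m")
```

For instance, a 300 GHz signal has a wavelength of about 1 mm, which matches the lower edge of the infrared range quoted above.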

Protocols And Standards

A protocol is a set of rules that defines what to communicate, how to communicate, and when to communicate.

Key Elements of Protocols

1. Syntax - Structure or format of the data

2. Semantics - Meaning of each section of bits

3. Timing - When to send and how fast
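A toy example may make syntax and semantics concrete. The frame layout below is entirely hypothetical (it is not a real protocol): a 4-byte header holding a message type, a sequence number, and a payload length, followed by the payload. Syntax is the byte layout; semantics is the rule that a DATA frame is answered with an ACK carrying the same sequence number. Timing (for example, how long a sender waits for the ACK) is deliberately left out of this sketch.

```python
import struct

# Syntax (assumed for illustration): 1-byte type, 1-byte sequence number,
# 2-byte payload length, all in network byte order, then the payload.
HEADER = struct.Struct("!BBH")
MSG_DATA, MSG_ACK = 1, 2


def encode(msg_type, seq, payload=b""):
    """Build a frame from its fields."""
    return HEADER.pack(msg_type, seq, len(payload)) + payload


def decode(frame):
    """Split a frame back into (type, sequence number, payload)."""
    msg_type, seq, length = HEADER.unpack(frame[:HEADER.size])
    payload = frame[HEADER.size:HEADER.size + length]
    if len(payload) != length:
        raise ValueError("truncated frame")
    return msg_type, seq, payload


def respond(frame):
    """Semantics: acknowledge DATA frames, ignore everything else."""
    msg_type, seq, _ = decode(frame)
    return encode(MSG_ACK, seq) if msg_type == MSG_DATA else None


frame = encode(MSG_DATA, 7, b"hi")
print(frame, decode(respond(frame)))
```

Two devices that disagree on any of these rules, such as the byte order of the length field, would be connected but unable to communicate.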

Network Standards

Networking standards define the rules for data communications that are needed

for interoperability of networking technologies and processes.

Standards help in creating and maintaining open markets and allow different

vendors to compete on the basis of the quality of their products while being

compatible with existing market products.

During data communication, a number of standards may be used simultaneously

at the different layers. The commonly used standards at each layer are −

Application layer − HTTP, HTML, POP, H.323, IMAP

Transport layer − TCP, SPX

Network layer − IP, IPX

Data link layer − Ethernet IEEE 802.3, X.25, Frame Relay

Physical layer − RS-232C (cable), V.92 (modem)

Standards

❖ Agreed-upon rules

❖ A common set of rules

Categories of Standards

1. De facto - By convention or by fact

❖ These are the standards that are followed without any formal plan or approval by any organization.

❖ They have come into existence due to traditions or facts.

❖ For example, HTTP started as a de facto standard.

2. De jure - By law or by government

❖ These standards are the ones which have been adopted through legislation by any officially

recognized standards organization.

❖ Most of the communication standards that are used today are de jure standards.

Some of the noted standards organizations are

❖ International Organization for Standardization (ISO)

❖ International Telecommunication Union (ITU)

❖ Institute of Electrical and Electronics Engineers (IEEE)

❖ American National Standards Institute (ANSI)

❖ Internet Engineering Task Force (IETF)

❖ Electronic Industries Association (EIA)

Switching

A network is a set of connected devices. Whenever we have multiple devices, we have the

problem of how to connect them to make one-to-one communication possible. One

solution is to make a point-to-point connection between each pair of devices (a mesh

topology) or between a central device and every other device (a star topology). These

methods, however, are impractical and wasteful when applied to very large networks.

The number and length of the links require too much infrastructure to be cost-

efficient, and the majority of those links would be idle most of the time.

A better solution is switching. A switched network consists of a series of

interlinked nodes, called switches. Switches are devices capable of creating temporary

connections between two or more devices linked to the switch. In a switched network,

some of these nodes are connected to the end systems (computers or telephones, for

example). Others are used only for routing. Figure 1.26 shows a switched network.

Figure 1.26 Switched network

We can then divide today's networks into three broad categories: circuit-switched networks, packet-switched networks, and message-switched networks. Packet-switched networks can further be divided into two subcategories, virtual-circuit networks and datagram networks, as shown in Figure 1.27.

Figure 1.27 Types of switched network

CIRCUIT-SWITCHED NETWORKS

A circuit-switched network consists of a set of switches connected by physical

links. A connection between two stations is a dedicated path made of one or more links.

However, each connection uses only one dedicated channel on each link. Each link is

normally divided into n channels by using FDM or TDM. In circuit switching, the

resources need to be reserved during the setup phase; the resources remain dedicated for

the entire duration of data transfer until the teardown phase.

Figure 1.28 Circuit-switched network

Three Phases

The actual communication in a circuit-switched network requires three phases: connection

setup, data transfer, and connection teardown.

Setup Phase

Before the two parties (or multiple parties in a conference call) can communicate, a

dedicated circuit (combination of channels in links) needs to be established. Connection

setup means creating dedicated channels between the switches. For example, in Figure 1.28,

when system A needs to connect to system M, it sends a setup request that includes the

address of system M, to switch I. Switch I finds a channel between itself and switch IV

that can be dedicated for this purpose. Switch I then sends the request to switch IV, which

finds a dedicated channel between itself and switch III. Switch III informs system M of system

A's intention at this time.

In the next step to making a connection, an acknowledgment from system M

needs to be sent in the opposite direction to system A. Only after system A receives

this acknowledgment is the connection established.

Data Transfer Phase

After the establishment of the dedicated circuit (channels), the two parties can transfer

data.

Teardown Phase

When one of the parties needs to disconnect, a signal is sent to each switch to release the

resources.

Efficiency

It can be argued that circuit-switched networks are not as efficient as the other two types

of networks because resources are allocated during the entire duration of the connection.

These resources are unavailable to other connections.


Delay

Although a circuit-switched network normally has low efficiency, the delay in this type of

network is minimal. During data transfer the data are not delayed at each switch; the

resources are allocated for the duration of the connection.

The total delay is due to the time needed to create the connection, transfer data, and

disconnect the circuit.

Switching at the physical layer in the traditional telephone network uses the circuit-switching approach.

Packet Switched Networks

In a packet-switched network, the message is divided into a number of units called packets that are individually routed from the source to the destination. In the datagram approach described below, the network is connectionless.

Figure 1.29 Packet-switched network

➢ Packet switching is a switching technique in which the message is not sent in one go; it is divided into smaller pieces that are sent individually.

➢ The message splits into smaller pieces known as packets and packets are given a unique

number to identify their order at the receiving end.

➢ Every packet contains some information in its headers such as source address, destination

address and sequence number.

➢ Packets travel across the network, each taking the shortest path possible.

➢ All the packets are reassembled at the receiving end in correct order.

➢ If any packet is missing or corrupted, the receiver sends a message asking the sender to resend it.

➢ If all packets arrive and are placed in the correct order, an acknowledgment message is sent.
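The steps in the list above can be sketched as follows. This is a simplified illustration: the header fields, the packet size, and the assumption that the receiver already knows how many packets to expect are all choices made for the example.

```python
import random


def packetize(message: bytes, size: int, src: str, dst: str) -> list:
    """Split a message into packets, each carrying a small header."""
    return [
        {"src": src, "dst": dst, "seq": seq, "payload": message[i:i + size]}
        for seq, i in enumerate(range(0, len(message), size))
    ]


def reassemble(packets: list, expected_count: int):
    """Rebuild the message in sequence order, or return None if a packet is missing."""
    by_seq = {p["seq"]: p["payload"] for p in packets}
    if set(by_seq) != set(range(expected_count)):
        return None  # the receiver would ask for a resend here
    return b"".join(by_seq[seq] for seq in range(expected_count))


message = b"switching splits messages into packets"
packets = packetize(message, size=8, src="A", dst="X")
random.shuffle(packets)  # packets may arrive out of order
assert reassemble(packets, len(packets)) == message
```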


Approaches Of Packet Switching

➢ There are two approaches to packet switching: datagram packet switching and virtual-circuit switching.

➢ Datagram packet switching:

➢ It is a packet-switching technology in which each packet, known as a datagram, is treated as an independent entity. Each packet contains information about the destination, and the switch uses this information to forward the packet to the correct destination.

➢ The packets are reassembled at the receiving end in correct order.

➢ In Datagram Packet Switching technique, the path is not fixed.

➢ Intermediate nodes take the routing decisions to forward the packets.

➢ Datagram Packet Switching is also known as connectionless switching.

DATAGRAM NETWORKS

In a packet-switched network, there is no resource reservation; resources are allocated on

demand. The allocation is done on a first come, first-served basis. When a switch receives

a packet, no matter what the source or destination is, the packet must wait if there are

other packets being processed. This lack of reservation may create delay. For example, if

we do not have a reservation at a restaurant, we might have to wait.

In a datagram network, each packet is treated independently of all others. Packets

in this approach are referred to as datagrams. Datagram switching is normally done at the

network layer.

Figure 1.30 shows how the datagram approach is used to deliver four packets from

station A to station X. The switches in a datagram network are traditionally referred to as

routers.

The datagram networks are sometimes referred to as connectionless networks.

The term connectionless here means that the switch (packet switch) does not keep

information about the connection state. There are no setup or teardown phases. Each

packet is treated the same by a switch regardless of its source or destination.

A switch in a datagram network uses a routing table that is based on the destination

address. The destination address in the header of a packet in a datagram network remains

the same during the entire journey of the packet.
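A destination-based routing table can be sketched as a simple lookup. The addresses and port numbers below are made up for illustration; the point is that the switch keeps no per-connection state and never rewrites the destination address.

```python
# Destination address -> output port (hypothetical values).
ROUTING_TABLE = {
    "X": 3,
    "Y": 1,
    "Z": 2,
}


def forward(packet: dict, table: dict) -> int:
    """Choose the output port from the destination address alone."""
    return table[packet["dst"]]


packet = {"src": "A", "dst": "X", "seq": 0, "payload": b"hello"}
port = forward(packet, ROUTING_TABLE)
print("forward on port", port)
```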

Figure 1.30 Datagram networks

Efficiency

The efficiency of a datagram network is better than that of a circuit-switched network;

resources are allocated only when there are packets to be transferred.

Delay

There may be greater delay in a datagram network than in a virtual-circuit network.

Although there are no setup and teardown phases, each packet may experience a wait at a

switch before it is forwarded. In addition, since not all packets in a message necessarily

travel through the same switches, the delay is not uniform for the packets of a message.

Switching in the Internet is done by using the datagram approach to packet

switching at the network layer.

VIRTUAL-CIRCUIT NETWORKS

A virtual-circuit network is a cross between a circuit-switched network and a datagram network. It

has some characteristics of both.

Figure 1.31 virtual-circuit network

1. As in a circuit-switched network, there are setup and teardown phases in addition to the data transfer phase.

2. Resources can be allocated during the setup phase, as in a circuit-switched network, or on demand, as in a datagram network.

3. As in a datagram network, data are packetized and each packet carries an address in the header. However, the address in the header has local jurisdiction (it defines what should be the next switch and the channel on which the packet is being carried), not end-to-end jurisdiction.

4. As in a circuit-switched network, all packets follow the same path established during the connection.

5. A virtual-circuit network is normally implemented in the data link layer, while a circuit-switched network is implemented in the physical layer and a datagram network in the network layer.

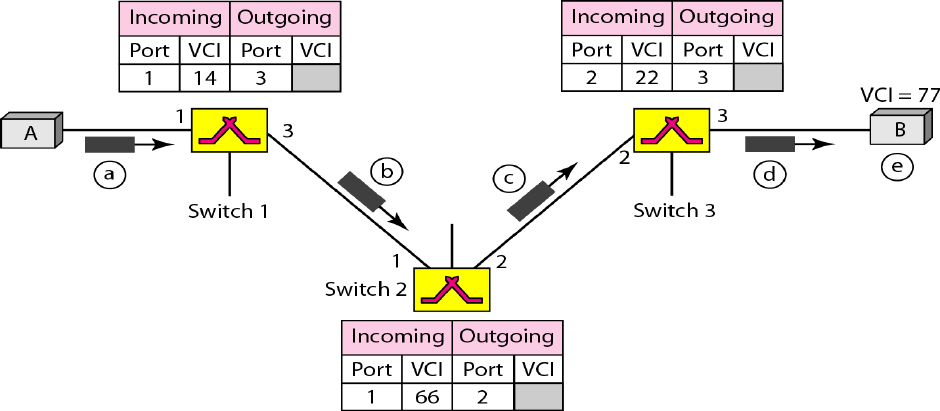

Addressing

In a virtual-circuit network, two types of addressing are involved: global and local

(virtual-circuit identifier).

Global Addressing

A source or a destination needs to have a global address, that is, an address that is unique within the scope of the network.

Virtual-Circuit Identifier

The identifier that is actually used for data transfer is called the virtual-circuit identifier

(VCI). A VCI, unlike a global address, is a small number that has only switch scope; it is

used by a frame between two switches. When a frame arrives at a switch, it has a VCI;

when it leaves, it has a different VCI.

Figure 1.32 shows how the VCI in a data frame changes from one switch to another. Note that a VCI does not need to be a large number since each switch can use its own unique set of VCIs.

Figure 1.32 Virtual-Circuit Identifier

Three Phases

A virtual-circuit network goes through three phases: setup, data transfer, and teardown. We first

discuss the data transfer phase, which is more straightforward; we then talk about the

setup and teardown phases.


Data Transfer Phase

Figure 1.33 Data Transfer Phase

To transfer a frame from a source to its destination, all switches need to have a

table entry for this virtual circuit. The table, in its simplest form, has four columns.

We show later how the switches make their table entries, but for the moment

we assume that each switch has a table with entries for all active virtual circuits.

Figure 1.33 shows such a switch and its corresponding table.

The figure shows a frame arriving at port 1 with a VCI of 14. When the frame arrives,

the switch looks in its table to find port 1 and a VCI of 14. When it is found, the switch

knows to change the VCI to 22 and send out the frame from port 3.
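The table lookup described above can be sketched as a dictionary keyed by incoming port and incoming VCI. The first row is the example from the text (port 1, VCI 14 to port 3, VCI 22); the second row and the frame layout are invented for illustration.

```python
# (incoming port, incoming VCI) -> (outgoing port, outgoing VCI)
SWITCH_TABLE = {
    (1, 14): (3, 22),   # the entry used in the text
    (1, 77): (2, 41),   # hypothetical second virtual circuit
}


def switch_frame(table: dict, in_port: int, frame: dict):
    """Look up the frame's virtual circuit, rewrite its VCI, and pick the output port."""
    out_port, out_vci = table[(in_port, frame["vci"])]
    return out_port, {**frame, "vci": out_vci}


out_port, out_frame = switch_frame(SWITCH_TABLE, 1, {"vci": 14, "data": b"payload"})
print(out_port, out_frame["vci"])
```

Because the key includes the incoming port, the same VCI value can be reused on different ports of the same switch.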

Figure 1.34 shows how a frame from source A reaches destination B and how its VCI changes during the trip.

Figure 1.34 VCI changes during the trip

Each switch changes the VCI and routes the frame.

The data transfer phase is active until the source sends all its frames to the

destination. The procedure at the switch is the same for each frame of a message. The

process creates a virtual circuit, not a real circuit, between the source and destination.


Setup Phase

In the setup phase, a switch creates an entry for a virtual circuit. For example, suppose

source A needs to create a virtual circuit to B. Two steps are required: the setup request

and the acknowledgment.

Setup Request: A setup request frame is sent from the source to the destination. Figure 1.35 shows the process.

Figure 1.35 Setup Phase

a. Source A sends a setup frame to switch 1.

b. Switch 1 receives the setup request frame. It knows that a frame going from A to B goes out through port 3. For the moment, assume that it knows the output port. The switch creates an entry in its table for this virtual circuit, but it is only able to fill three of the four columns. The switch assigns the incoming port (1) and chooses an available incoming VCI (14) and the outgoing port (3). It does not yet know the outgoing VCI, which will be found during the acknowledgment step. The switch then forwards the frame through port 3 to switch 2.

c. Switch 2 receives the setup request frame. The same events happen here as at switch 1; three columns of the table are completed: in this case, incoming port (1), incoming VCI (66), and outgoing port (2).

d. Switch 3 receives the setup request frame. Again, three columns are completed: incoming port (2), incoming VCI (22), and outgoing port (3).

e. Destination B receives the setup frame, and if it is ready to receive frames from A, it assigns a VCI to the incoming frames that come from A, in this case 77. This VCI lets the destination know that the frames come from A, and not other sources.


Acknowledgment: A special frame, called the acknowledgment frame, completes the entries in the switching tables. Figure 1.36 shows the process.

Figure 1.36 Acknowledgment Phase

a. The destination sends an acknowledgment to switch 3. The acknowledgment carries the global source and destination addresses so the switch knows which entry in the table is to be completed. The frame also carries VCI 77, chosen by the destination as the incoming VCI for frames from A. Switch 3 uses this VCI to complete the outgoing VCI column for this entry. Note that 77 is the incoming VCI for destination B, but the outgoing VCI for switch 3.

b. Switch 3 sends an acknowledgment to switch 2 that contains its incoming VCI in the table, chosen in the previous step. Switch 2 uses this as the outgoing VCI in the table.

c. Switch 2 sends an acknowledgment to switch 1 that contains its incoming VCI in the table, chosen in the previous step. Switch 1 uses this as the outgoing VCI in the table.

d. Finally, switch 1 sends an acknowledgment to source A that contains its incoming VCI in the table, chosen in the previous step.

e. The source uses this as the outgoing VCI for the data frames to be sent to destination B.
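The setup request and acknowledgment steps can be traced with the port and VCI values given in the text. The sketch below is only an illustration of how the fourth column gets filled in; the dictionary layout and the function name are assumptions.

```python
# After the setup request, each switch has filled three of the four columns.
tables = {
    "switch1": {"in_port": 1, "in_vci": 14, "out_port": 3, "out_vci": None},
    "switch2": {"in_port": 1, "in_vci": 66, "out_port": 2, "out_vci": None},
    "switch3": {"in_port": 2, "in_vci": 22, "out_port": 3, "out_vci": None},
}


def acknowledge(tables: dict, path: list, destination_vci: int) -> int:
    """Walk the path backwards from the destination.

    Each switch learns its outgoing VCI from the incoming VCI of the next hop.
    Returns the VCI the source must put in its data frames.
    """
    vci = destination_vci
    for name in reversed(path):
        tables[name]["out_vci"] = vci
        vci = tables[name]["in_vci"]
    return vci


source_vci = acknowledge(tables, ["switch1", "switch2", "switch3"], destination_vci=77)
for name, row in tables.items():
    print(name, row)
print("source A sends with VCI", source_vci)
```

With these values, switch 3 ends up with outgoing VCI 77, switch 2 with 22, switch 1 with 66, and source A uses VCI 14.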

Teardown Phase

In this phase, source A, after sending all frames to B, sends a special frame called a

teardown request. Destination B responds with a teardown confirmation frame. All

switches delete the corresponding entry from their tables.

Efficiency


In virtual-circuit switching, all packets belonging to the same source and destination travel

the same path; but the packets may arrive at the destination with different delays if

resource allocation is on demand.

Delay

In a virtual-circuit network, there is a one-time delay for setup and a one-time delay for

teardown. If resources are allocated during the setup phase, there is no wait time for

individual packets. Figure 1.37 shows the delay for a packet traveling through two switches in a virtual-circuit network.

Figure 1.37 Virtual-circuit network delay

Switching at the data link layer in a switched WAN is normally implemented by using

virtual-circuit techniques.

Comparison Table 1.1

Comparison Table 1.2

Comparison Table 1.3

OSI

OSI stands for Open Systems Interconnection.

It was created by the International Organization for Standardization (ISO).

It was created as a framework and reference model to explain how different networking technologies work together and interact.

It is not a standard that networking protocols must follow.

Each layer has specific functions it is responsible for.

All layers work together in the correct order to move data around a network.

Figure 1.38 OSI Layers

Top to bottom

–All People Seem To Need Data Processing

Bottom to top

–Please Do Not Throw Sausage Pizza Away

The interaction between layers in the OSI model

Figure 1.39 Interaction between layers in the OSI model

An exchange using the OSI model

Figure 1.40 An exchange using the OSI model

❖ The 7 layers are classified into 3 groups:

1. network support layers

2. transport layer

3. user support layers

❖ Layers 1, 2, 3 (physical, data link, and network) are the network support layers.

❖ Layer 4, the transport layer, links the two subgroups.

❖ Layers 5, 6, 7 (session, presentation, and application) are the user support layers.
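The grouping above, and the way data moves down the stack at the sender and up the stack at the receiver, can be sketched as follows. This is a deliberately simplified illustration: the header strings H7 to H2 are placeholders invented for the example, and real layers add structured headers (the data link layer typically adds a trailer as well).

```python
LAYERS = {
    7: "application", 6: "presentation", 5: "session",   # user support layers
    4: "transport",                                       # links the two groups
    3: "network", 2: "data link", 1: "physical",          # network support layers
}


def send(data: str) -> str:
    """Going down the stack, layers 7 to 2 each prepend their own header."""
    for layer in range(7, 1, -1):
        data = f"H{layer}|{data}"
    return data  # layer 1 would turn this into signals on the medium


def receive(frame: str) -> str:
    """Going up the stack, each layer strips the header added by its peer."""
    for layer in range(2, 8):
        prefix = f"H{layer}|"
        if not frame.startswith(prefix):
            raise ValueError(f"header of the {LAYERS[layer]} layer is missing")
        frame = frame[len(prefix):]
    return frame


on_the_wire = send("hello")
print(on_the_wire)
print(receive(on_the_wire))
```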

Physical Layer

❖ The physical layer is the bottom layer (layer 1) of the OSI model.

❖ It is responsible for the actual physical connection between the devices.

❖ The physical layer is responsible for moving individual bits from one node to the next.

Figure 1.41 Physical Layer

Functions of Physical Layer

❖ Convert bits to signals - The physical layer handles a stream of bits (a sequence of 0's and 1's) without any interpretation. To be transmitted, bits must be encoded into signals, electrical or optical.

❖ Bit synchronization - The sender and receiver must be synchronized at the bit level; that is, the clocks of the sender and the receiver must be synchronized.

❖ Physical characteristics of interfaces and media - It defines the characteristics of the interface between the devices and the transmission medium. It also defines the type of transmission medium.

❖ Bit rate control - It also defines the transmission rate (data rate), i.e. the number of bits sent per second.

❖ Line configuration - point-to-point or multipoint.

❖ Physical topology - mesh, star, bus, ring.

❖ Transmission mode - simplex, half-duplex, or full-duplex.

❖ Multiplexing - For example, code-division multiplexing (CDM) is a technique in which each channel transmits its bits as a coded, channel-specific sequence of pulses.

Data Link Layer

❖ The data link layer is responsible for moving frames from one node to the next.

Figure 1.42 Data link Layer

Functions of Data Link Layer

❖ Framing:- Divides the data from the network layer into frames.

❖ Physical Addressing:- Adds a header to the frame to define the physical addresses of the source and destination machines.

❖ Flow Control:- A traffic-regulating mechanism implemented by the data link layer that prevents a fast sender from drowning a slow receiver.

❖ Error Control:- Detects damaged or lost frames and retransmits them.

❖ Feedback:- After transmitting the frames, the system waits for feedback.

Network Layer


Figure 1.43 Network Layer

Functions of Network layer

❖ It is responsible for the source-to-destination delivery of packets across multiple networks.

❖ Routing:- Provides the mechanism to transmit data over independent networks that are linked together.

❖ Logical addressing:- Adds the logical addresses of the sender and receiver.

Transport Layer

It is responsible for source-process-to-destination-process delivery of the entire message.

Figure 1.44 Transport layer

Transport layer provides two types of services:

1) Connection Oriented Transmission: In this type of transmission the receiving device

sends an acknowledgment back to the source after a packet or group of packets is

received.

2) Connectionless Transmission: In this type of transmission the receiver does not

acknowledge receipt of a packet.

Functions of Transport Layer

❖ Segmentation and Reassembly: Divide the message received from Session layer into

Segments and number them to make a sequence for reassembly at the receiving side.

❖ Service point addressing: Transport layer makes sure that the message is delivered to

the correct process on destination machine.

❖ Error Control: Makes sure that the entire message arrives without errors; otherwise it is retransmitted.

❖ Flow Control: The transport layer makes sure that the sender does not overwhelm the receiver.

Session Layer

❖ It is responsible for beginning, maintaining & ending the communication between two

devices, which is called session.

Figure 1.45 Session layer


Functions of Session Layer

❖ Establishment, maintaining and ending a session:

❖ Sends SYN packet – establish request

❖ Receives ACK & SYN- established

❖ To end – Sender sends ACK

❖ Dialog Control: The session layer allows two systems to enter into a dialog.

❖ Synchronization: Allows a process to add checkpoints to a stream of data.

Presentation Layer

❖ This layer is concerned with the syntax and semantics of the information exchanged

between two systems.

Figure 1.46 Presentation layer

Functions of Presentation Layer

❖ Data Translation: Encoding and decoding. The sending side converts data from the sender's format to a common format; the receiving side converts it from the common format to the receiver's format.

❖ Data Encryption: For security and privacy purposes.

❖ Data Compression: Data compression reduces the number of bits contained in the information.
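Two of these functions can be illustrated with Python's standard library: translation to a common byte encoding (UTF-8 is used here as an assumed common format) and lossless compression with `zlib`. Encryption is left out of this sketch.

```python
import zlib

message = "data " * 200

encoded = message.encode("utf-8")       # translation: text to a common byte format
compressed = zlib.compress(encoded)     # compression: fewer bits to transmit

# Receiving side: undo the steps in reverse order.
restored = zlib.decompress(compressed).decode("utf-8")

print(len(encoded), "bytes before compression,", len(compressed), "bytes after")
assert restored == message
```

Highly repetitive data like this compresses very well; data that is already compressed or random will not shrink much.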

Application Layer

❖ Provides user interfaces and support for services such as e-mail and file transfer.

Figure 1.47 Application layer

Functions of Application Layer

❖ Network Virtual Terminal: It allows a user to log on to a remote host.

❖ File Transfer Access, and Management: This application allows a user to access files

in a remote host.

❖ Mail Services: This application provides various e-mail services.

❖ Directory Services: This application provides the distributed database

sources and access for global information about various objects and services.

Figure 1.48 OSI Layers functions

TCP/IP Model (Transmission Control Protocol/Internet Protocol)

–A protocol suite is a large number of related protocols that work together to

allow networked computers to communicate.

❖ The TCP/IP protocol suite was defined as having four layers:

❖ Host-to-network

❖ Internet

❖ Transport

❖ Application


Figure 1.48 TCP/IP

Relationship of layers and addresses in TCP/IP

Application Layer

Application layer protocols define the rules when implementing specific network applications

They rely on the underlying layers to provide accurate and efficient data delivery

Typical protocols:

FTP – File Transfer Protocol

For file transfer

Telnet – Remote terminal protocol

For remote login on any other computer on the network

SMTP – Simple Mail Transfer Protocol

For mail transfer

HTTP – Hypertext Transfer Protocol

For Web browsing

The Simple Mail Transfer Protocol is a communication protocol for electronic mail

transmission.

The File Transfer Protocol is a standard network protocol used for the transfer of

computer files between a client and server on a computer network.

The Domain Name System is a hierarchical and decentralized naming system for

computers, services, or other resources connected to the Internet or a private network.

Simple Network Management Protocol is an Internet Standard protocol for collecting

and organizing information about managed devices on IP networks and for modifying

that information to change device behavior.


Network File System is a distributed file system protocol allowing a user on a client

computer to access files over a computer network much like local storage is accessed.

Trivial File Transfer Protocol is a simple lockstep File Transfer Protocol which allows a

client to get a file from or put a file onto a remote host.

The TCP/IP application layer encompasses the same functions as these OSI model layers: Application, Presentation, and Session.

Transport Layer

TCP is a connection-oriented protocol

This does not mean it has a physical connection between sender and receiver

TCP provides the functions that allow a connection to exist virtually, also called a virtual circuit

TCP provides the functions:

Dividing a chunk of data into segments

Reassembling segments into the original chunk

Providing further functions such as reordering and data resend

Offering a reliable byte-stream delivery service

UDP, by contrast, is connectionless and does not provide reordering or resending

The layer functions the same as the Transport layer in OSI

It synchronizes source and destination computers to set up the session between the respective computers

Internet Layer

The network layer, also called the internet layer, deals with packets and connects

independent networks to transport the packets across network boundaries. The network

layer protocols are the IP and the Internet Control Message Protocol (ICMP), which is

used for error reporting.

Host-to-network layer

The Host-to-network layer is the lowest layer of the TCP/IP reference model. It combines the link layer and the physical layer of the ISO/OSI model. At this layer, data is transferred between adjacent network nodes in a WAN or between nodes on the same LAN.


Comparison of OSI and TCP/IP

TEXT / REFERENCE BOOKS

1. Behrouz A. Forouzan, Data Communications and Networking, McGraw-Hill Education India Pvt. Ltd, New Delhi.

2. William Stallings, Data and Computer Communications (8th ed.), Pearson Education, 2007.

3. P.C. Gupta, Data Communications and Computer Networks, Prentice-Hall of India, 2006.

4. Andrew S. Tanenbaum, Computer Networks (4th ed.), Pearson.

5. L. L. Peterson and B. S. Davie, Computer Networks: A Systems Approach (3rd ed.), Morgan Kaufmann, 2003.


QUESTIONS

PART A

1. List the components of data communication.

2. Define Protocol and standards

3. Explain the Classification of Data communications standards

4. What are the network criteria of Data Communication

5. Analyze the Line configuration

6. Define network Topology

7. Explain the types of Transmission mode

8. Organize the different Categories of networks

9. Define Transmission media and give the types

10. Analyze the switching circuit.

11. Differentiate OSI model and TCP/IP Model

PART B

1. Analyze the layers of OSI model and describe the responsibilities of each layer.

2. Distinguish different network topologies and explain each topology with a neat

diagram.

3. Analyze the TCP/IP model with a neat diagram? Explain the functions performed

in each layer.

4. Investigate the different types of Transmission media with a neat diagram.

5. Examine the different Categories of switching circuit.


UNIT – II – Data communication and networks – SITA1401


UNIT II

DATA LINK LAYER

Error detection and correction– Line Discipline - Flow Control Error control-

Medium Access Control – Ethernet -CSMA/CD - Wireless LAN - CSMA/CA –

IEEE 802.11, Bluetooth

1. Providing services to the network layer:

a. Unacknowledged connectionless service. Appropriate for low error rate and real-time traffic. Ex: Ethernet

b. Acknowledged connectionless service. Useful on unreliable channels such as WiFi. Ack/Timer/Resend

c. Acknowledged connection-oriented service. Guarantees that frames are received exactly once and in the right order. Appropriate over long, unreliable links such as a satellite channel or a long-distance telephone circuit

2. Framing: The data link layer divides the stream of bits received from the network layer into manageable data units called frames.

3. Physical Addressing: The data link layer adds a header to the frame to define the physical address of the sender or receiver of the frame, if the frames are to be distributed to different systems on the network.

4. Flow Control: A receiving node can receive frames at a faster rate than it can process them. Without flow control, the receiver's buffer can overflow and frames can get lost. To overcome this problem, the data link layer uses flow control to prevent the sending node on one side of the link from overwhelming the receiving node on the other side. This prevents a traffic jam at the receiver.

5. Error Control: Error control is achieved by adding a trailer at the end of the frame. The data link layer also adds a mechanism to prevent duplication of frames.

Error detection: Errors can be introduced by signal attenuation and noise. The data link layer protocol provides a mechanism to detect one or more errors. This is achieved by adding error detection bits to the frame, so that the receiving node can perform an error check.

Error correction: Error correction is similar to error detection, except that the receiving node not only detects the errors but also determines where they occurred in the frame.

6. Access Control: When two or more devices are connected to the same link, the protocols of this layer determine which device has control over the link at any given time.

7. Reliable delivery: The data link layer can provide a reliable delivery service, i.e., it transmits the network layer datagram without any error. A reliable delivery service is accomplished with transmissions and acknowledgements. It is mainly provided over links with higher error rates, so that an error can be corrected locally, on the link where it occurs, rather than forcing the data to be retransmitted end to end.

8. Half-Duplex & Full-Duplex: In full-duplex mode, both nodes can transmit data at the same time. In half-duplex mode, only one node can transmit at a time.

FRAMING:

To provide service to the network layer, the data link layer must use the service

provided to it by the physical layer. What the physical layer does is accept a raw bit stream

and attempt to deliver it to the destination. This bit stream is not guaranteed to be error

free. The number of bits received may be less than, equal to, or more than the number of

bits transmitted, and they may have different values. It is up to the data link layer to detect and, if necessary, correct errors. The usual approach is for the data link layer to break the bit stream up into discrete frames and compute the checksum for each frame (framing). When a frame arrives at the destination, the checksum is recomputed. If the newly computed checksum is different from the one contained in the frame, the data link layer knows that an error has occurred and takes steps to deal with it (e.g., discarding the bad frame and possibly also sending back an error report). We will look at four framing methods:

1. Character count.

2. Flag bytes with byte stuffing.

3. Starting and ending flags, with bit stuffing.

4. Physical layer coding violations.

The character count method uses a field in the header to specify the number of characters in the frame. When the data link layer at the destination sees the character count, it knows how many characters follow and hence where the end of the frame is. This technique is shown in Fig. (a) for four frames of sizes 5, 5, 8, and 8 characters, respectively.

A character stream. (a) Without errors. (b) With one error

The trouble with this algorithm is that the count can be garbled by a transmission error. For

example, if the character count of 5 in the second frame of Fig. (b) becomes a 7, the

destination will get out of synchronization and will be unable to locate the start of the next

frame. Even if the checksum is incorrect so the destination knows that the frame is bad, it


still has no way of telling where the next frame starts. Sending a frame back to the source

asking for a retransmission does not help either, since the destination does not know how

many characters to skip over to get to the start of the retransmission. For this reason, the

character count method is rarely used anymore.
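The loss of synchronization is easy to reproduce. The sketch below assumes, as in the usual textbook figure, that the count includes the count character itself; the digit values in the stream are made up for the example.

```python
def split_frames(stream: list) -> list:
    """Split a character stream into frames using a leading count.

    The count is assumed to include the count character itself.
    """
    frames, i = [], 0
    while i < len(stream):
        count = stream[i]
        if count < 1:
            raise ValueError("invalid count, cannot continue")
        frames.append(stream[i:i + count])
        i += count
    return frames


# Four frames of sizes 5, 5, 8 and 8 characters.
good = [5, 1, 2, 3, 4,  5, 6, 7, 8, 9,  8, 0, 1, 2, 3, 4, 5, 6,  8, 7, 8, 9, 0, 1, 2, 3]

# One transmission error: the count of the second frame changes from 5 to 7.
bad = list(good)
bad[5] = 7

print([len(f) for f in split_frames(good)])
print([len(f) for f in split_frames(bad)])
```

After the single corrupted count, every later frame boundary is wrong: data characters are interpreted as counts, and the receiver has no way to find the true start of the next frame.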

The flag bytes with byte stuffing method gets around the problem of resynchronization after

an error by having each frame start and end with special bytes. In the past, the starting and

ending bytes were different, but in recent years most protocols have used the same byte,

called a flag byte, as both the starting and ending delimiter, as shown in Fig. (a) as FLAG.

In this way, if the receiver ever loses synchronization, it can just search for the flag byte to

find the end of the current frame. Two consecutive flag bytes indicate the end of one frame

and start of the next one.

(a) A frame delimited by flag bytes (b) Four examples of byte sequences before and

after byte stuffing

It may easily happen that the flag byte's bit pattern occurs in the data. This situation

will usually interfere with the framing. One way to solve this problem is to have the

sender's data link layer insert a special escape byte (ESC) just before each ''accidental'' flag

byte in the data. The data link layer on the receiving end removes the escape byte before

the data are given to the network layer. This technique is called byte stuffing or character

stuffing.

Thus, a framing flag byte can be distinguished from one in the data by the absence or

presence of an escape byte before it.

What happens if an escape byte occurs in the middle of the data? The answer is

that, it too is stuffed with an escape byte. Thus, any single escape byte is part of an

escape sequence, whereas a doubled one indicates that a single escape occurred

naturally in the data. Some examples are shown in Fig. (b). In all cases, the byte

sequence delivered after destuffing is exactly the same as the original byte sequence.
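Byte stuffing as described above can be sketched in a few lines. The byte values chosen for FLAG and ESC are assumptions for the example (the text only names the two bytes), and the scheme is the simple one from the text in which the escaped byte is sent unchanged.

```python
FLAG = 0x7E  # assumed value for the flag byte
ESC = 0x7D   # assumed value for the escape byte


def stuff(payload: bytes) -> bytes:
    """Frame a payload: escape every FLAG or ESC in the data, then add delimiters."""
    out = bytearray([FLAG])
    for byte in payload:
        if byte in (FLAG, ESC):
            out.append(ESC)
        out.append(byte)
    out.append(FLAG)
    return bytes(out)


def destuff(frame: bytes) -> bytes:
    """Remove the delimiters and the escape bytes added by stuff()."""
    if len(frame) < 2 or frame[0] != FLAG or frame[-1] != FLAG:
        raise ValueError("frame is not delimited by flag bytes")
    out, escaped = bytearray(), False
    for byte in frame[1:-1]:
        if not escaped and byte == ESC:
            escaped = True       # drop the escape, keep the next byte as data
            continue
        out.append(byte)
        escaped = False
    if escaped:
        raise ValueError("escape byte at end of frame")
    return bytes(out)


payload = bytes([0x41, FLAG, 0x42, ESC, ESC, 0x43])
frame = stuff(payload)
assert destuff(frame) == payload
```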

A major disadvantage of using this framing method is that it is closely tied to the use

of 8-bit characters. Not all character codes use 8-bit characters. For example, UNICODE uses 16-bit characters, so a new technique had to be developed to allow arbitrary-sized characters.

The starting and ending flags with bit stuffing method allows data frames to contain an arbitrary

number of bits and allows character codes with an arbitrary number of bits per character. It

works like this. Each frame begins and ends with a special bit pattern, 01111110 (in fact,

a flag byte). Whenever the sender's data link layer encounters five consecutive 1s in the

data, it automatically stuffs a 0 bit into the outgoing bit stream. This bit stuffing is

analogous to byte stuffing, in which an escape byte is stuffed into the outgoing character

stream before a flag byte in the data.

When the receiver sees five consecutive incoming 1 bits, followed by a 0 bit, it automatically de-stuffs (i.e., deletes) the 0 bit. Just as byte stuffing is completely transparent to the network layer in both computers, so is bit stuffing. If the user data contain the flag pattern, 01111110, this flag is transmitted as 011111010 but stored in the receiver's memory as 01111110.

Fig:Bit stuffing. (a) The original data. (b) The data as they appear on the line.

(c) The data as they are stored in the receiver's memory after destuffing.

With bit stuffing, the boundary between two frames can be unambiguously recognized by

the flag pattern. Thus, if the receiver loses track of where it is, all it has to do is scan the

input for flag sequences, since they can only occur at frame boundaries and never within

the data.
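
The bit stuffing and destuffing rules above can be sketched as follows. This is a minimal illustration that represents bit streams as Python strings of '0' and '1'. The function names bit_stuff and bit_destuff are assumptions made for the example, and the destuffing function expects only the frame contents between the flags.

```python
FLAG_BITS = "01111110"  # the flag pattern from the text


def bit_stuff(data: str) -> str:
    """Insert a 0 after every run of five consecutive 1s."""
    out = []
    ones = 0
    for bit in data:
        out.append(bit)
        if bit == "1":
            ones += 1
            if ones == 5:
                out.append("0")  # stuffed bit
                ones = 0
        else:
            ones = 0
    return "".join(out)


def bit_destuff(stuffed: str) -> str:
    """Delete the 0 that follows every run of five consecutive 1s."""
    out = []
    ones = 0
    skip_next = False
    for bit in stuffed:
        if skip_next:
            skip_next = False  # this is the stuffed 0; drop it
            ones = 0
            continue
        out.append(bit)
        if bit == "1":
            ones += 1
            if ones == 5:
                skip_next = True
        else:
            ones = 0
    return "".join(out)


on_the_line = bit_stuff(FLAG_BITS)      # user data equal to the flag pattern
in_memory = bit_destuff(on_the_line)    # what the receiver stores
```

Because the stuffed stream never contains six consecutive 1s, the flag pattern cannot appear inside the data, which is why a receiver can resynchronize by scanning for flags.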

Physical layer coding violations: This method of framing is only applicable to networks in
which the encoding on the physical medium contains some redundancy. For example,
some LANs encode 1 bit of data by using 2 physical bits. Normally, a 1 bit is a high-low
pair and a 0 bit is a low-high pair. The scheme means that every data bit has a transition
in the middle, making it easy for the receiver to locate the bit boundaries. The
combinations high-high and low-low are not used for data but are used for delimiting
frames in some protocols.
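
The idea can be illustrated with a small sketch that writes each physical half-bit as 'H' (high) or 'L' (low). Using high-high as the start delimiter and low-low as the end delimiter is a hypothetical choice made only for this example, since the text does not say which violation marks which boundary. The names encode_frame and decode_frame are likewise assumptions.

```python
SYMBOLS = {"1": "HL", "0": "LH"}           # data encoding from the text
DECODE = {pair: bit for bit, pair in SYMBOLS.items()}
START, END = "HH", "LL"                     # hypothetical delimiter choice


def encode_frame(bits: str) -> str:
    """Encode data bits and wrap them in coding-violation delimiters."""
    return START + "".join(SYMBOLS[b] for b in bits) + END


def decode_frame(signal: str) -> str:
    """Check the delimiters and decode the pairs in between."""
    pairs = [signal[i:i + 2] for i in range(0, len(signal), 2)]
    if len(pairs) < 2 or pairs[0] != START or pairs[-1] != END:
        raise ValueError("missing frame delimiter")
    # A KeyError here would mean a violation appeared inside the data.
    return "".join(DECODE[p] for p in pairs[1:-1])


signal = encode_frame("10")
bits = decode_frame(signal)
```

Since valid data pairs always contain a transition, the delimiters can never be confused with data, and no stuffing is needed.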

As a final note on framing, many data link protocols use a combination of a character count
with one of the other methods for extra safety. When a frame arrives, the count field is
used to locate the end of the frame. Only if the appropriate delimiter is present at that
position and the checksum is correct is the frame accepted as valid. Otherwise, the input
stream is scanned for the next delimiter.

ELEMENTARY DATA LINK PROTOCOLS

Simplest Protocol

It is very simple. The sender sends a sequence of frames without even thinking about the
receiver. Data are transmitted in one direction only. Both sender and receiver are always
ready. Processing time can be ignored. Infinite buffer space is available. And best of all,
the communication channel between the data link layers never damages or loses frames. We
will nickname this thoroughly unrealistic protocol ‘‘Utopia.’’ The Utopia protocol is
unrealistic because it does not handle either flow control or error correction.

Stop-and-Wait Protocol

It is still very simple. The sender sends one frame and waits for feedback from the receiver.
When the ACK arrives, the sender sends the next frame.

It is called the Stop-and-Wait Protocol because the sender sends one frame, stops until it
receives confirmation from the receiver (okay to go ahead), and then sends the next frame.
We still have unidirectional communication for data frames, but auxiliary ACK frames (simple
tokens of acknowledgment) travel in the other direction. In this way we add flow control to
our previous protocol.

NOISY CHANNELS

Although the Stop-and-Wait Protocol gives us an idea of how to add flow control to its
predecessor, noiseless channels are nonexistent. We can either ignore errors (as we
sometimes do) or add error control to our protocols. We discuss three protocols in this
section that use error control.

Sliding Window Protocols:

1. Stop-and-Wait Automatic Repeat Request

2. Go-Back-N Automatic Repeat Request

3. Selective Repeat Automatic Repeat Request

1. Stop-and-Wait Automatic Repeat Request

To detect and correct corrupted frames, we need to add redundancy bits to our data

frame. When the frame arrives at the receiver site, it is checked and if it is corrupted, it is

silently discarded. The detection of errors in this protocol is manifested by the silence of

the receiver.

Lost frames are more difficult to handle than corrupted ones. In our previous

protocols, there was no way to identify a frame. The received frame could be the correct

one, or a duplicate, or a frame out of order. The solution is to number the frames. When the

receiver receives a data frame that is out of order, this means that frames were either lost
or duplicated.

The lost frames need to be resent in this protocol. If the receiver does not respond
when there is an error, how can the sender know which frame to resend? To remedy this
problem, the sender keeps a copy of the sent frame. At the same time, it starts a timer. If the
timer expires and there is no ACK for the sent frame, the frame is resent, the copy is held,
and the timer is restarted. Since the protocol uses the stop-and-wait mechanism, there is
only one specific frame that needs an ACK.

Error correction in Stop-and-Wait ARQ is done by keeping a copy of the sent frame and
retransmitting the frame when the timer expires.

In Stop-and-Wait ARQ, we use sequence numbers to number the frames. The

sequence numbers are based on modulo-2 arithmetic.

In Stop-and-Wait ARQ, the acknowledgment number always announces in

modulo-2 arithmetic the sequence number of the next frame expected.
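
The behaviour described above can be sketched as a small deterministic simulation. This is only a sketch under stated assumptions: the function name stop_and_wait and the parameters drop_data and drop_ack (sets of transmission indices that the channel loses) are hypothetical, the timer is modelled simply as "no ACK came back, so resend", and a corrupted frame is treated the same as a lost one because the receiver silently discards it.

```python
def stop_and_wait(frames, drop_data=(), drop_ack=()):
    """Simulate Stop-and-Wait ARQ with modulo-2 sequence numbers.

    drop_data / drop_ack: indices (0-based) of the transmissions whose
    data frame or ACK is lost. Returns (delivered payloads, transmissions).
    """
    delivered = []
    sn = 0   # sender: sequence number of the frame being sent
    rn = 0   # receiver: sequence number of the next frame expected
    tx = 0   # total number of data-frame transmissions so far
    for payload in frames:
        while True:
            attempt = tx
            tx += 1                      # send (or resend) the kept copy
            if attempt in drop_data:
                continue                 # frame lost: timer expires, resend
            if sn == rn:
                delivered.append(payload)  # new frame: accept it
                rn = (rn + 1) % 2
            # otherwise it is a duplicate: discard it but still send an ACK
            ack = rn                     # ACK carries next frame expected
            if attempt in drop_ack:
                continue                 # ACK lost: timer expires, resend
            if ack == (sn + 1) % 2:
                sn = ack                 # frame acknowledged: move on
                break
    return delivered, tx


clean = stop_and_wait(["a", "b", "c"])
lost_frame = stop_and_wait(["a", "b", "c"], drop_data={1})
lost_ack = stop_and_wait(["a", "b", "c"], drop_ack={0})
```

In the lost_ack case the receiver sees frame 0 twice, but the sequence number lets it recognise the second copy as a duplicate, so each payload is delivered exactly once.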

Bandwidth Delay Product:

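Assume that, in a Stop-and-Wait ARQ system, the bandwidth of the line is 1 Mbps, and 1
bit takes 20 ms to make a round trip. What is the bandwidth-delay product? If the system
data frames are 1000 bits in length, what is the utilization percentage of the link?

The bandwidth-delay product is (1 × 10^6 bits/s) × (20 × 10^-3 s) = 20,000 bits. The
system could therefore send 20,000 bits during the time it takes for data to travel from
the sender to the receiver and back, but it sends only 1000 bits. The link utilization is
only 1000/20,000, or 5 percent. For this reason, for a link with a high bandwidth or long
delay, the use of Stop-and-Wait ARQ wastes the capacity of the link.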
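
The arithmetic of this example can be checked with a short sketch. The function names and the frames_in_flight parameter are assumptions added for illustration. The default of one frame in flight corresponds to Stop-and-Wait ARQ.

```python
def bandwidth_delay_product(bandwidth_bps: int, rtt_ms: int) -> float:
    """Number of bits that fit in the link during one round trip."""
    return bandwidth_bps * rtt_ms / 1000


def utilization(bandwidth_bps: int, rtt_ms: int, frame_bits: int,
                frames_in_flight: int = 1) -> float:
    """Fraction of the link capacity used per round trip (at most 1.0)."""
    bdp = bandwidth_delay_product(bandwidth_bps, rtt_ms)
    return min(1.0, frames_in_flight * frame_bits / bdp)


bdp = bandwidth_delay_product(1_000_000, 20)   # 1 Mbps, 20 ms round trip
util = utilization(1_000_000, 20, 1000)        # one 1000-bit frame at a time
print(f"bandwidth-delay product: {bdp:.0f} bits, utilization: {util:.0%}")
```

Raising frames_in_flight shows how keeping several frames outstanding uses more of the link's capacity.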

2. Go-Back-N Automatic Repeat Request

To improve the efficiency of transmission (filling the pipe), multiple frames must be
in transit while waiting for acknowledgment. In other words, we need to let more than
one frame be outstanding to keep the channel busy while the sender is waiting for
acknowledgment.

This protocol is called Go-Back-N Automatic Repeat Request. In this protocol we can send
several frames before receiving acknowledgments; we keep a copy of these frames until
the acknowledgments arrive.

In the Go-Back-N Protocol, the sequence numbers are modulo 2^m, where m is
the size of the sequence number field in bits.

The sequence numbers range from 0 to 2^m − 1. For example, if m is 4, the only sequence
numbers are 0 through 15 inclusive.

The sender window at any time divides the possible sequence numbers into four regions.

The first region, from the far left to the left wall of the window, defines the

sequence numbers belonging to frames that are already acknowledged. The sender does

not worry about these frames and keeps no copies of them.

The second region, colored in Figure (a), defines the range of sequence numbers

belonging to the frames that are sent and have an unknown status. The sender needs to

wait to find out if these frames have been received or were lost. We call these

outstanding frames.

The third region, white in the figure, defines the range of sequence numbers for
frames that can be sent; however, the corresponding data packets have not yet been
received from the network layer.

Finally, the fourth region defines sequence numbers that cannot be used until the
window slides.

The send window is an abstract concept defining an imaginary box of size 2^m − 1
with three variables: Sf, Sn, and Ssize.

The variable Sf defines the sequence number of

the first (oldest) outstanding frame. The variable Sn holds the sequence number that will

be assigned to the next frame to be sent. Finally, the variable Ssize defines the size of the

window.

Figure (b) shows how a send window can slide one or more slots to the right
when an acknowledgment arrives from the other end. The acknowledgments in this
protocol are cumulative, meaning that more than one frame can be acknowledged by an
ACK frame. In the figure, frames 0, 1, and 2 are acknowledged, so the window has slid
three slots to the right. Note that the value of Sf is 3 because frame 3 is now the first
outstanding frame.

The send window can slide one or more slots when a valid

acknowledgment arrives.
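
The send window described above can be sketched as a small class. This is an illustrative sketch, not a full protocol implementation: the class and method names are assumptions, and the acknowledgment number is assumed to carry the sequence number of the next frame expected, as in the Stop-and-Wait ARQ description earlier.

```python
class GoBackNSender:
    """Send window of size 2^m - 1 with variables Sf, Sn, and Ssize."""

    def __init__(self, m: int):
        self.modulus = 2 ** m
        self.s_size = self.modulus - 1  # Ssize: size of the window
        self.sf = 0                     # Sf: first (oldest) outstanding frame
        self.sn = 0                     # Sn: number for the next frame to send
        self.copies = {}                # kept copies: sequence number -> payload

    def outstanding(self) -> int:
        """Number of frames sent but not yet acknowledged."""
        return (self.sn - self.sf) % self.modulus

    def can_send(self) -> bool:
        return self.outstanding() < self.s_size

    def send(self, payload):
        if not self.can_send():
            raise RuntimeError("window full: wait for an acknowledgment")
        seq = self.sn
        self.copies[seq] = payload      # keep a copy until it is acknowledged
        self.sn = (self.sn + 1) % self.modulus
        return seq

    def receive_ack(self, ack_no: int) -> int:
        """Cumulative ACK; returns how many slots the window slid."""
        advance = (ack_no - self.sf) % self.modulus
        if advance == 0 or advance > self.outstanding():
            return 0                    # not a valid acknowledgment: ignore
        for _ in range(advance):
            del self.copies[self.sf]
            self.sf = (self.sf + 1) % self.modulus
        return advance

    def timeout(self):
        """Go back N: all outstanding frames, oldest first, to be resent."""
        seqs = [(self.sf + i) % self.modulus for i in range(self.outstanding())]
        return [(s, self.copies[s]) for s in seqs]


sender = GoBackNSender(m=3)
sent = [sender.send(f"frame{i}") for i in range(5)]   # frames 0..4 outstanding
slid = sender.receive_ack(3)                          # frames 0, 1, 2 acknowledged
to_resend = sender.timeout()
```

After the cumulative acknowledgment, Sf is 3, matching the example in the text, and a timeout would resend frames 3 and 4.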

Receiver window: The receive window is tracked with a single variable, Rn (receive
window, next frame expected). The sequence

numbers to the left of the window belong to the frames already received and

acknowledged; the sequence numbers to the right of this window define the frames that